Tracing

Track agent behavior and evaluate performance in real-time with OpenTelemetry-based tracing.

Tracing provides comprehensive observability for your AI agents, automatically capturing execution traces, spans, and performance metrics. All tracing is built on OpenTelemetry standards, so you can monitor agent behavior regardless of implementation language.

Quickstart

Initialize the Tracer

```python
from judgeval.tracer import Tracer

judgment = Tracer(project_name="default_project")
```

```typescript
import { Judgeval } from "judgeval";

const client = Judgeval.create();

const tracer = await client.nodeTracer.create({
  projectName: "default_project",
});
```

Trace your Agent

Tracing captures your agent's inputs, outputs, tool calls, and LLM calls to help you debug and analyze agent behavior.

To properly trace your agent in Python, apply the `@judgment.observe()` decorator to all functions and tools of your agent, including LLM calls.

```python
import time

from openai import OpenAI
from judgeval.tracer import Tracer

judgment = Tracer(project_name="default_project")
client = OpenAI()

# A tool span: builds the prompt (the sleep simulates work)
@judgment.observe(span_type="tool")
def format_task(question: str) -> str:
    time.sleep(0.5)
    return f"Please answer the following question: {question}"

# An LLM span: records the model call
@judgment.observe(span_type="llm")
def openai_completion(prompt: str) -> str:
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content

@judgment.observe(span_type="tool")
def answer_question(prompt: str) -> str:
    time.sleep(0.3)
    return openai_completion(prompt)

# The top-level span: every call above is nested beneath it
@judgment.observe(span_type="function")
def run_agent(question: str) -> str:
    task = format_task(question)
    answer = answer_question(task)
    return answer

if __name__ == "__main__":
    result = run_agent("What is the capital of the United States?")
    print(result)
```

To properly trace your agent in TypeScript, wrap all functions and tools of your agent, including LLM calls, with `tracer.observe(...)`.

import { Judgeval } from "judgeval";

import OpenAI from "openai";

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

});

const client = Judgeval.create();

const tracer = await client.nodeTracer.create({

projectName: "default_project",

});

const runAgent = tracer.observe(async function runAgent(

question: string

): Promise<string> {

const task = await formatTask(question);

const answer = await answerQuestion(task);

return answer;

},

"function");

const formatTask = tracer.observe(async function formatTask(

question: string

): Promise<string> {

await new Promise((resolve) => setTimeout(resolve, 500));

return `Please answer the following question: ${question}`;

},

"tool");

const answerQuestion = tracer.observe(async function answerQuestion(

prompt: string

): Promise<string> {

await new Promise((resolve) => setTimeout(resolve, 300));

return await openAICompletion(prompt);

},

"tool");

const openAICompletion = tracer.observe(async function openAICompletion(

prompt: string

): Promise<string> {

const response = await openai.chat.completions.create({

model: "gpt-4o-mini",

messages: [{ role: "user", content: prompt }],

});

return response.choices[0]?.message.content || "No answer";

},

"llm");

await runAgent("What is the capital of the United States?");

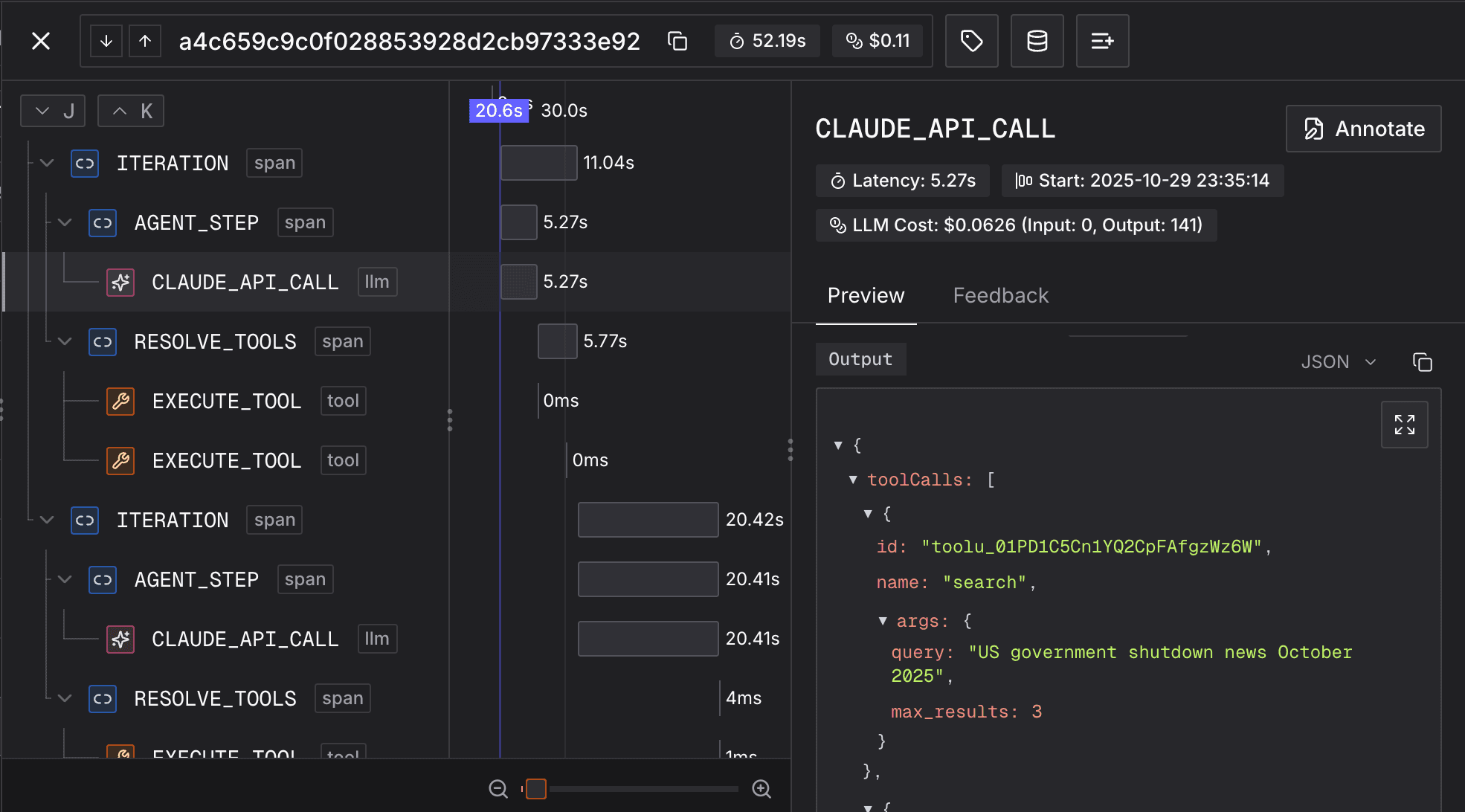

await tracer.shutdown();Congratulations! You've just created your first trace. It should look like this:

What Gets Captured

The Tracer automatically captures comprehensive execution data:

- Execution Flow: Function call hierarchy, execution duration, and parent-child span relationships

- LLM Interactions: Model parameters, prompts, responses, token usage, and cost per API call

- Agent Behavior: Tool usage, function inputs/outputs, state changes, and error states

- Performance Metrics: Latency per span, total execution time, and cost tracking
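The parent-child span relationships listed above come from nesting: a span opened while another span is active becomes that span's child. The toy, stdlib-only model below illustrates the mechanism. `Span`, `observe`, and `render` are hypothetical illustrations, not judgeval's implementation. The real tracer exports OpenTelemetry spans and also records inputs, outputs, and LLM metadata.

```python
import contextvars
import functools
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Span:
    name: str
    span_type: str
    parent: Optional["Span"] = field(default=None, repr=False)
    start: float = 0.0
    end: float = 0.0
    children: List["Span"] = field(default_factory=list)

    @property
    def duration(self) -> float:
        return self.end - self.start


# Holds the span that is currently executing (None at the top level).
_current_span = contextvars.ContextVar("current_span", default=None)
finished_roots: List[Span] = []


def observe(span_type: str = "function") -> Callable:
    """Hypothetical stand-in for @judgment.observe(): records nesting and timing only."""
    def decorator(fn: Callable) -> Callable:
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            parent = _current_span.get()
            span = Span(name=fn.__name__, span_type=span_type, parent=parent)
            if parent is not None:
                parent.children.append(span)  # parent-child relationship
            token = _current_span.set(span)
            span.start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                span.end = time.perf_counter()  # duration is recorded even if fn raises
                _current_span.reset(token)
                if parent is None:
                    finished_roots.append(span)  # a finished root span completes the trace
        return wrapper
    return decorator


def render(span: Span, depth: int = 0) -> List[str]:
    """Indent each span under its parent, like a trace tree view."""
    lines = [f"{'  ' * depth}{span.name} [{span.span_type}]"]
    for child in span.children:
        lines.extend(render(child, depth + 1))
    return lines


@observe("tool")
def format_task(question: str) -> str:
    return f"Please answer the following question: {question}"


@observe("function")
def run_agent(question: str) -> str:
    return format_task(question)


run_agent("What is the capital of the United States?")
print("\n".join(render(finished_roots[0])))
# run_agent [function]
#   format_task [tool]
```

Because the current span lives in a context variable, the same nesting logic also works across `async` calls, which is why decorating every function and tool is enough to reconstruct the full call hierarchy.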

Auto-Instrumentation

Auto-instrumentation automatically traces LLM client calls without manually wrapping each call with observe(). This reduces boilerplate code and ensures all LLM interactions are captured.

Python supports auto-instrumentation through the wrap() function. It automatically tracks all LLM API calls including token usage, costs, and streaming responses for both sync and async clients.

Refer to Model Providers for more information on supported providers.
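Conceptually, wrapping a client works like a proxy. Every method call on the wrapped object passes through a layer that records it, so individual call sites need no decorator. The stdlib-only sketch below illustrates that idea with a fake client. `toy_wrap`, the proxy classes, and the fake client are hypothetical. The real `wrap()` additionally captures token usage, cost, streaming responses, and async clients, as described above.

```python
import time
from types import SimpleNamespace
from typing import Any, Dict, List

recorded_calls: List[Dict[str, Any]] = []


class _TracedCallable:
    """Times one method call and appends a record of it to recorded_calls."""

    def __init__(self, fn: Any, path: str) -> None:
        self._fn = fn
        self._path = path

    def __call__(self, *args: Any, **kwargs: Any) -> Any:
        start = time.perf_counter()
        try:
            return self._fn(*args, **kwargs)
        finally:
            recorded_calls.append({
                "method": self._path,
                "model": kwargs.get("model"),
                "duration_s": time.perf_counter() - start,
            })


class _TracedProxy:
    """Forwards attribute access to the wrapped object, tracing anything callable."""

    def __init__(self, target: Any, path: str) -> None:
        self._target = target
        self._path = path

    def __getattr__(self, name: str) -> Any:
        attr = getattr(self._target, name)
        path = f"{self._path}.{name}"
        return _TracedCallable(attr, path) if callable(attr) else _TracedProxy(attr, path)


def toy_wrap(client: Any) -> Any:
    """Hypothetical stand-in for judgeval's wrap(); not its real implementation."""
    return _TracedProxy(client, "client")


# A fake client shaped like client.chat.completions.create(...), so no network is needed.
def _fake_create(model: str, messages: list) -> dict:
    return {"choices": [{"message": {"content": "Paris"}}]}


fake_client = SimpleNamespace(
    chat=SimpleNamespace(completions=SimpleNamespace(create=_fake_create))
)

client = toy_wrap(fake_client)
response = client.chat.completions.create(
    model="gpt-4o-mini",
    messages=[{"role": "user", "content": "What is the capital of France?"}],
)
print(response["choices"][0]["message"]["content"])  # Paris
print(recorded_calls[0]["method"])  # client.chat.completions.create
```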

```python
from judgeval.tracer import Tracer, wrap
from openai import OpenAI

judgment = Tracer(project_name="default_project")

# Wrap the client to automatically trace all calls
client = wrap(OpenAI())

@judgment.observe(span_type="function")
def ask_question(question: str) -> str:
    # This call is automatically traced
    response = client.chat.completions.create(
        model="gpt-4o-mini",
        messages=[{"role": "user", "content": question}],
    )
    return response.choices[0].message.content

result = ask_question("What is the capital of France?")
print(result)
```

To correctly implement auto-instrumentation of LLM calls in TypeScript, you need to do all of the following:

- Initialize an instrumentation file to be preloaded before the application starts.

- Import the tracer from the instrumentation file in the application.

- Bundle your application using CommonJS.

```typescript
// instrumentation.ts (preloaded before the application starts)
import { OpenAIInstrumentation } from "@opentelemetry/instrumentation-openai";
import { Judgeval, type NodeTracer } from "judgeval";

export const client = Judgeval.create();

// Create the tracer once; every caller shares the same initialization promise
const initTracerPromise: Promise<NodeTracer> = client.nodeTracer.create({
  projectName: "auto_instrumentation_example",
  enableEvaluation: true,
  enableMonitoring: true,
  instrumentations: [new OpenAIInstrumentation()],
});

export async function getTracer(): Promise<NodeTracer> {
  return await initTracerPromise;
}
```

```typescript
// Application code: imports the tracer from the instrumentation file
import { Example } from "judgeval";
import OpenAI from "openai";
import { client, getTracer } from "./instrumentation";

function requireEnv(name: string): string {
  const value = process.env[name];
  if (!value) {
    throw new Error(`Environment variable ${name} is not set`);
  }
  return value;
}

const OPENAI_API_KEY = requireEnv("OPENAI_API_KEY");

const openai = new OpenAI({
  apiKey: OPENAI_API_KEY,
});

async function _chatWithUser(userMessage: string): Promise<string> {
  const messages: OpenAI.Chat.ChatCompletionMessageParam[] = [
    { role: "system", content: "You are a helpful assistant." },
    { role: "user", content: userMessage },
  ];

  // No manual wrapping needed: OpenAIInstrumentation traces this call
  const completion = await openai.chat.completions.create({
    model: "gpt-4o-mini",
    messages,
  });

  const result = completion.choices[0].message.content || "";
  console.log(`User: ${userMessage}`);
  console.log(`Assistant: ${result}`);

  // Score the answer asynchronously against the actual user input
  const tracer = await getTracer();
  tracer.asyncEvaluate(
    client.scorers.builtIn.answerRelevancy(),
    Example.create({
      input: userMessage,
      actual_output: result,
    })
  );

  return result;
}

(async () => {
  const tracer = await getTracer();
  const chatWithUser = tracer.observe(_chatWithUser);

  const result = await chatWithUser("What is the capital of France?");
  console.log(result);

  // Wait (here, 10 seconds) so the asynchronous evaluation can finish before shutdown
  await new Promise((resolve) => setTimeout(resolve, 10000));
  await tracer.shutdown();
})();
```

OpenTelemetry Integration

Judgment's tracing is built on OpenTelemetry, the industry-standard observability framework. This means:

Standards Compliance

- Compatible with existing OpenTelemetry tooling

- Follows OTEL semantic conventions

- Integrates with OTEL collectors and exporters

Advanced Configuration

You can integrate Judgment's tracer with your existing OpenTelemetry setup:

```python
from judgeval.tracer import Tracer
from opentelemetry.sdk.trace import TracerProvider

tracer_provider = TracerProvider()

# Initialize with OpenTelemetry resource attributes
tracer = Tracer(
    project_name="default_project",
    resource_attributes={
        "service.name": "my-ai-agent",
        "service.version": "1.2.0",
        "deployment.environment": "production",
    },
)

# Connect to your existing OTEL infrastructure
tracer_provider.add_span_processor(tracer.get_processor())
otel_tracer = tracer_provider.get_tracer(__name__)

# Use native OTEL spans alongside Judgment decorators.
# answer_question is assumed to be an observed function defined elsewhere,
# such as the one in the Quickstart.
def process_request(question: str) -> str:
    with otel_tracer.start_as_current_span("process_request_span") as span:
        span.set_attribute("input", question)
        answer = answer_question(question)
        span.set_attribute("output", answer)
        return answer
```

Resource Attributes

Resource attributes describe the entity producing telemetry data. Common attributes include:

- `service.name`: Name of your service
- `service.version`: Version number
- `deployment.environment`: Environment (production, staging, etc.)
- `service.namespace`: Logical grouping

See the OpenTelemetry Resource specification for standard attributes.
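Standard OpenTelemetry SDKs can also read resource attributes from the `OTEL_RESOURCE_ATTRIBUTES` environment variable. Its format is comma-separated `key=value` pairs with percent-encoded values. This page does not say whether judgeval's `Tracer` reads that variable, so treat the sketch below only as an illustration of the format. It serializes the attributes from the example above and parses them back. The helper names are hypothetical.

```python
from typing import Dict
from urllib.parse import quote, unquote


def to_otel_resource_attributes(attrs: Dict[str, str]) -> str:
    """Serialize as key1=value1,key2=value2, percent-encoding each value."""
    return ",".join(f"{key}={quote(str(value), safe='')}" for key, value in attrs.items())


def from_otel_resource_attributes(raw: str) -> Dict[str, str]:
    """Parse the same format back into a dict (no validation in this sketch)."""
    result: Dict[str, str] = {}
    for pair in filter(None, raw.split(",")):
        key, _, value = pair.partition("=")
        result[key.strip()] = unquote(value.strip())
    return result


attrs = {
    "service.name": "my-ai-agent",
    "service.version": "1.2.0",
    "deployment.environment": "production",
}
encoded = to_otel_resource_attributes(attrs)
print(encoded)
# service.name=my-ai-agent,service.version=1.2.0,deployment.environment=production
```

Percent-encoding matters when a value contains a comma or space. For example, `"a b,c"` is encoded as `a%20b%2Cc`, so it does not split into a spurious extra pair.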

Multi-Agent System Tracing

Track which agent is responsible for each tool call in complex multi-agent systems.

Example Multi-Agent System

from planning_agent import PlanningAgent

if __name__ == "__main__":

planning_agent = PlanningAgent("planner-1")

goal = "Build a multi-agent system"

result = planning_agent.invoke_agent(goal)

print(result)from judgeval.tracer import Tracer

judgment = Tracer(project_name="multi-agent-system")from utils import judgment

from research_agent import ResearchAgent

from task_agent import TaskAgent

class PlanningAgent:

def __init__(self, id):

self.id = id

@judgment.agent() # Only on entry point

@judgment.observe()

def invoke_agent(self, goal):

print(f"Agent {self.id} is planning for goal: {goal}")

research_agent = ResearchAgent("Researcher1")

task_agent = TaskAgent("Tasker1")

research_results = research_agent.invoke_agent(goal)

task_result = task_agent.invoke_agent(research_results)

return f"Results from planning and executing for goal '{goal}': {task_result}"

@judgment.observe() # No @judgment.agent() needed

def random_tool(self):

passfrom utils import judgment

class ResearchAgent:

def __init__(self, id):

self.id = id

@judgment.agent()

@judgment.observe()

def invoke_agent(self, topic):

return f"Research notes for topic: {topic}: Findings and insights include..."from utils import judgment

class TaskAgent:

def __init__(self, id):

self.id = id

@judgment.agent()

@judgment.observe()

def invoke_agent(self, task):

result = f"Performed task: {task}, here are the results: Results include..."

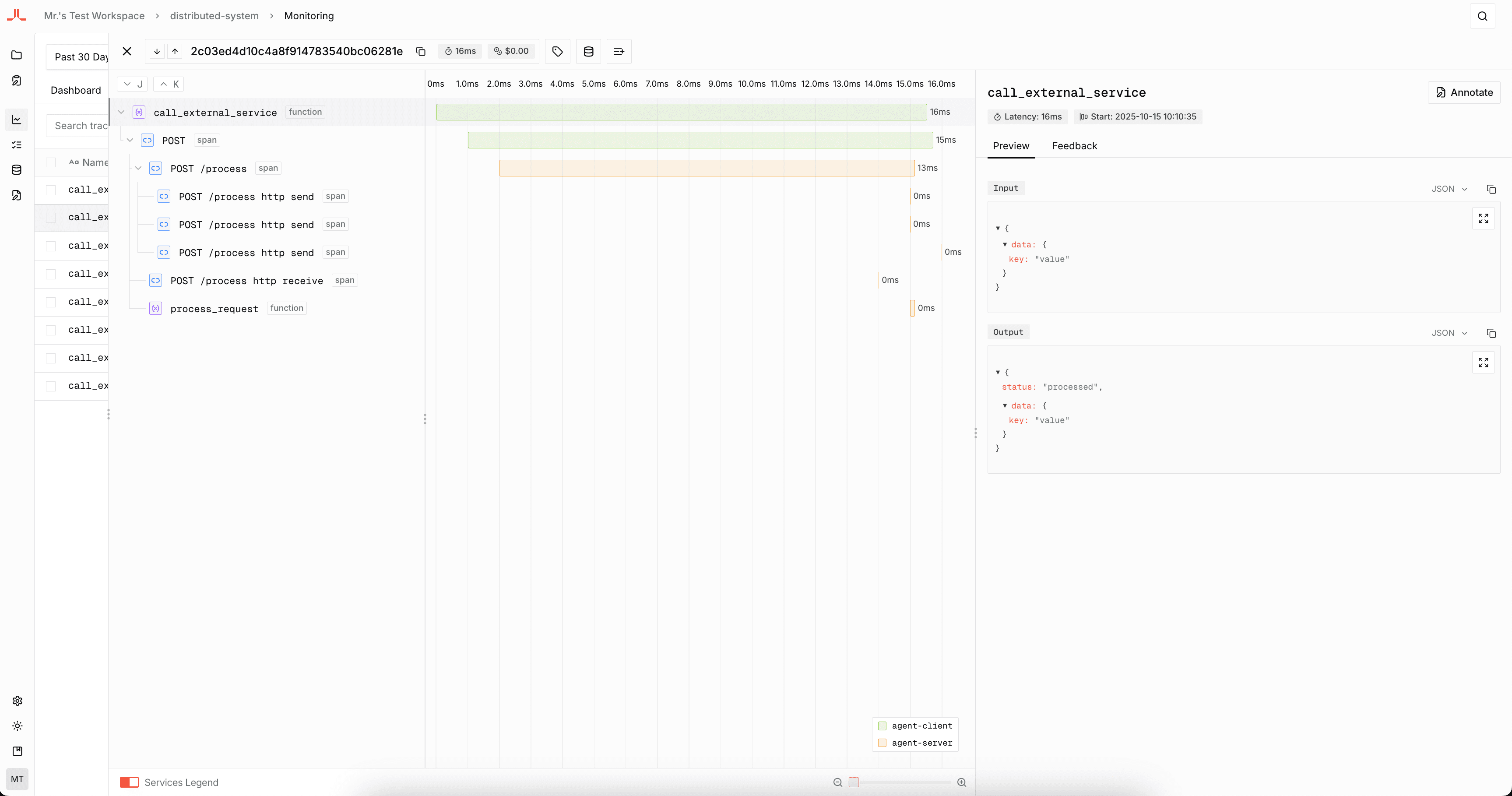

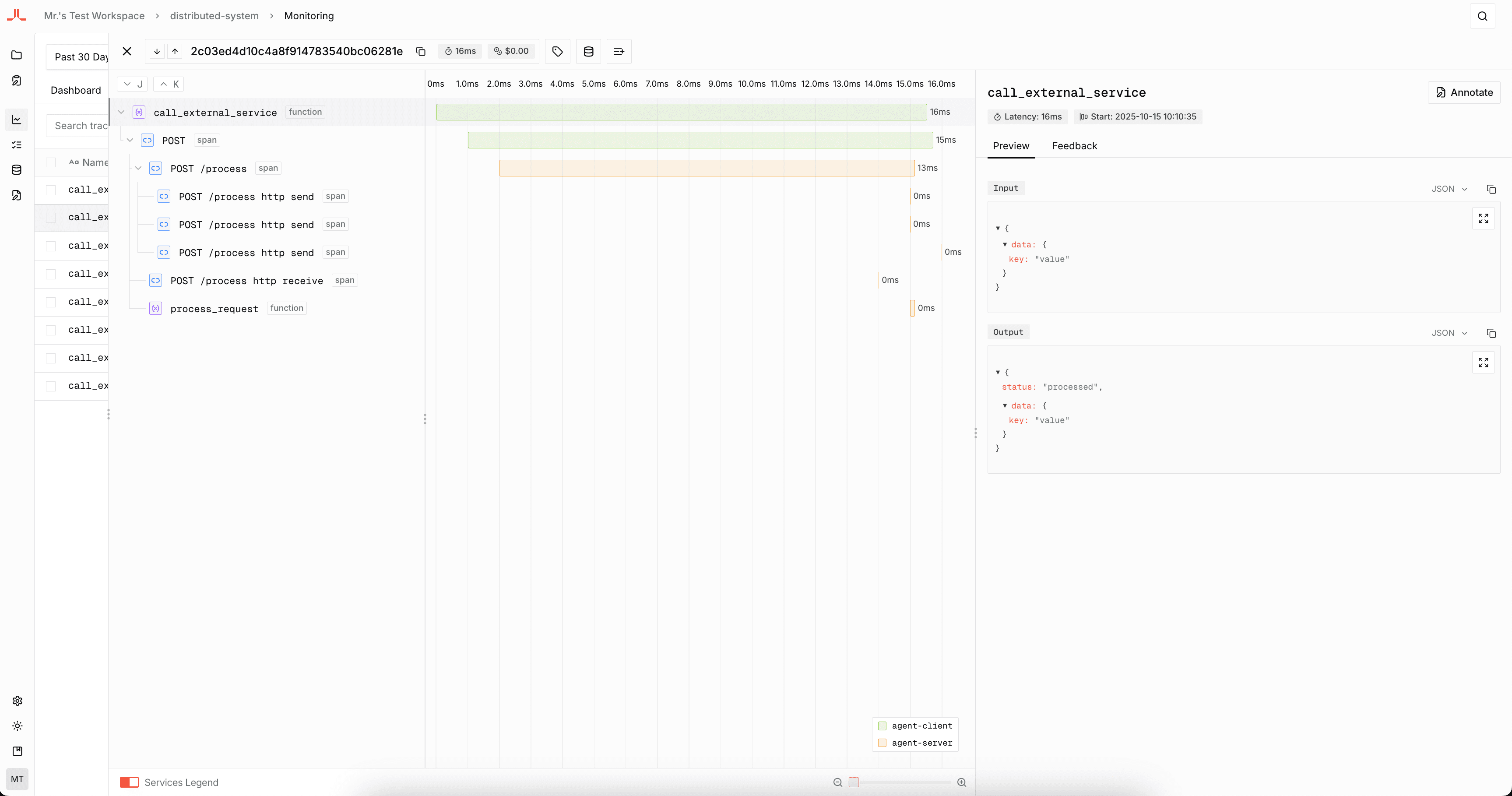

return resultThe trace clearly shows which agent called which method:

Distributed Tracing

Distributed tracing allows you to track requests across multiple services and systems, providing end-to-end visibility into complex workflows. This is essential for understanding how your AI agents interact with external services and how data flows through your distributed architecture.

Sending Trace State

When your agent needs to propagate trace context to downstream services, you can manually inject the current trace context into the headers of the outgoing request.
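With OpenTelemetry's default propagators, `inject` writes the active span's context into a W3C Trace Context `traceparent` header, plus `tracestate` and baggage headers when present. The stdlib sketch below only shows the shape of that header, so you can recognize it when debugging requests. `make_traceparent` and `inject_sketch` are hypothetical helpers. In real code, keep calling `inject` rather than formatting headers yourself.

```python
import secrets
from typing import Dict


def make_traceparent(trace_id: int, span_id: int, sampled: bool = True) -> str:
    """Format a W3C traceparent value: version-trace_id-parent_id-trace_flags."""
    if not (0 < trace_id < 2**128) or not (0 < span_id < 2**64):
        raise ValueError("trace_id must be a nonzero 128-bit int, span_id a nonzero 64-bit int")
    flags = "01" if sampled else "00"  # bit 0 of trace-flags is the "sampled" flag
    return f"00-{trace_id:032x}-{span_id:016x}-{flags}"


def inject_sketch(headers: Dict[str, str], trace_id: int, span_id: int) -> None:
    """Hypothetical stand-in for inject(): copies the current span's IDs into headers."""
    headers["traceparent"] = make_traceparent(trace_id, span_id)


# The example IDs used in the W3C Trace Context specification.
print(make_traceparent(0x4BF92F3577B34DA6A3CE929D0E0E4736, 0x00F067AA0BA902B7))
# 00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01

headers = {"Content-Type": "application/json"}
inject_sketch(headers, trace_id=secrets.randbits(128) or 1, span_id=secrets.randbits(64) or 1)
print(headers["traceparent"])
```

The trace ID stays the same across every service that handles the request, while the parent ID changes at each hop to the span that made the outgoing call. This is what lets the receiving service attach its spans to the caller's trace.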

```bash
# Use one of:
uv add judgeval requests
pip install judgeval requests
```

```python
from judgeval.tracer import Tracer
from opentelemetry.propagate import inject
import requests

judgment = Tracer(
    project_name="distributed-system",
    resource_attributes={"service.name": "agent-client"},
)

@judgment.observe(span_type="function")
def call_external_service(data):
    headers = {
        "Content-Type": "application/json",
        "Authorization": "Bearer ...",
    }
    # Add the current trace context (e.g. the traceparent header) to the request
    inject(headers)

    response = requests.post(
        "http://localhost:8001/process",
        json=data,
        headers=headers,
    )
    return response.json()

if __name__ == "__main__":
    result = call_external_service({"query": "Hello from client"})
    print(result)
```

```bash
# Use one of:
npm install judgeval @opentelemetry/api
yarn add judgeval @opentelemetry/api
pnpm add judgeval @opentelemetry/api
bun add judgeval @opentelemetry/api
```

```typescript
import { context, propagation } from "@opentelemetry/api";
import { Judgeval } from "judgeval";

const client = Judgeval.create();

const tracer = await client.nodeTracer.create({
  projectName: "distributed-system",
  resourceAttributes: { "service.name": "agent-client" },
});

async function makeRequest(url: string, options: RequestInit = {}): Promise<any> {
  // Inject the active trace context into the outgoing headers
  const headers: Record<string, string> = {};
  propagation.inject(context.active(), headers);

  const response = await fetch(url, {
    ...options,
    headers: { "Content-Type": "application/json", ...headers },
  });

  if (!response.ok) {
    throw new Error(`HTTP error! status: ${response.status}`);
  }

  return response.json();
}

const callExternalService = tracer.observe(async function (data: any) {
  return await makeRequest("http://localhost:8001/process", {
    method: "POST",
    body: JSON.stringify(data),
  });
}, "span");

const result = await callExternalService({ message: "Hello!" });
console.log(result);

await tracer.shutdown();
```

Receiving Trace State

When your service receives requests from other services, you can use middleware to automatically extract and set the trace context for all incoming requests.
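On the receiving side, `extract` (Python) and `propagation.extract` (TypeScript) read the W3C `traceparent` header and make the caller's span the parent of the spans your service creates. Below is a stdlib-only sketch of just the parsing step. It handles only version-`00` headers and ignores `tracestate`. `parse_traceparent` and `RemoteParent` are hypothetical names. In practice, use the real propagator, as the middleware examples do.

```python
import re
from typing import NamedTuple, Optional

# Version 00 layout: trace-id (32 hex), parent-id (16 hex), trace-flags (2 hex), lowercase only.
_TRACEPARENT_V00 = re.compile(r"00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class RemoteParent(NamedTuple):
    trace_id: str
    parent_span_id: str
    sampled: bool


def parse_traceparent(value: Optional[str]) -> Optional[RemoteParent]:
    """Return the caller's span context, or None if the header is missing or invalid."""
    if not value:
        return None
    match = _TRACEPARENT_V00.fullmatch(value.strip())
    if match is None:
        return None
    trace_id, parent_id, flags = match.groups()
    if trace_id == "0" * 32 or parent_id == "0" * 16:
        return None  # all-zero IDs are invalid per the spec
    return RemoteParent(trace_id, parent_id, bool(int(flags, 16) & 0x01))


incoming = {"traceparent": "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"}
parent = parse_traceparent(incoming.get("traceparent"))
print(parent.trace_id)  # 4bf92f3577b34da6a3ce929d0e0e4736
print(parse_traceparent("not-a-traceparent"))  # None
```

Returning `None` for a missing or malformed header mirrors the usual fallback: the service then starts a fresh trace instead of failing the request.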

```bash
# Use one of:
uv add judgeval fastapi uvicorn opentelemetry-instrumentation-fastapi
pip install judgeval fastapi uvicorn opentelemetry-instrumentation-fastapi
```

```python
from fastapi import FastAPI, Request
from judgeval.tracer import Tracer
from opentelemetry import context as otel_context
from opentelemetry.instrumentation.fastapi import FastAPIInstrumentor
from opentelemetry.propagate import extract

judgment = Tracer(
    project_name="distributed-system",
    resource_attributes={"service.name": "agent-server"},
)

app = FastAPI()
FastAPIInstrumentor.instrument_app(app)

@app.middleware("http")
async def trace_context_middleware(request: Request, call_next):
    # Make the caller's trace context the parent of spans created for this request
    ctx = extract(dict(request.headers))
    token = otel_context.attach(ctx)
    try:
        return await call_next(request)
    finally:
        otel_context.detach(token)

@judgment.observe(span_type="function")
def process_request(data):
    return {"message": "Hello from Python server!", "received_data": data}

@app.post("/process")
async def handle_process(request: Request):
    return process_request(await request.json())

if __name__ == "__main__":
    import uvicorn

    uvicorn.run(app, host="0.0.0.0", port=8001)
```

```bash
# Use one of:
npm install judgeval @opentelemetry/api express
yarn add judgeval @opentelemetry/api express
pnpm add judgeval @opentelemetry/api express
bun add judgeval @opentelemetry/api express
```

```typescript
import express from "express";
import { Judgeval } from "judgeval";
import { context, propagation } from "@opentelemetry/api";

const client = Judgeval.create();

const tracer = await client.nodeTracer.create({
  projectName: "distributed-system",
  resourceAttributes: { "service.name": "agent-server" },
});

const app = express();
app.use(express.json());

// Run each request inside the trace context extracted from its headers
app.use((req, res, next) => {
  const parentContext = propagation.extract(context.active(), req.headers);
  context.with(parentContext, () => {
    next();
  });
});

const processRequest = tracer.observe(async function (data: any) {
  return { message: "Hello from server!", received_data: data };
}, "span");

app.post("/process", async (req, res) => {
  const result = await processRequest(req.body);
  res.json(result);
});

app.listen(8001, () => console.log("Server running on port 8001"));
```

Toggling Monitoring

If your setup requires you to toggle monitoring intermittently, you can disable monitoring by:

- Setting the `JUDGMENT_MONITORING` environment variable to `false` (disables tracing):

  ```bash
  export JUDGMENT_MONITORING=false
  ```

- Setting the `JUDGMENT_EVALUATIONS` environment variable to `false` (disables scoring on traces):

  ```bash
  export JUDGMENT_EVALUATIONS=false
  ```

Next Steps

- Tracer SDK Reference - Explore the complete Tracer API including span access, metadata, and advanced configuration.