Introduction to Agent Scorers

How to build and use scorers to track agent behavioral regressions

Evaluation provides tools to measure agent behavior, prevent regressions, and maintain quality at scale. By combining custom scorers, prompt-based evaluation, datasets, and testing frameworks, you can systematically track behavioral drift before it impacts production.

Prompt Scorers

Prompt Scorers use natural language rubrics for LLM-as-judge evaluation.

- Define criteria using plain language on the platform

- TracePromptScorers evaluate full traces instead of individual examples

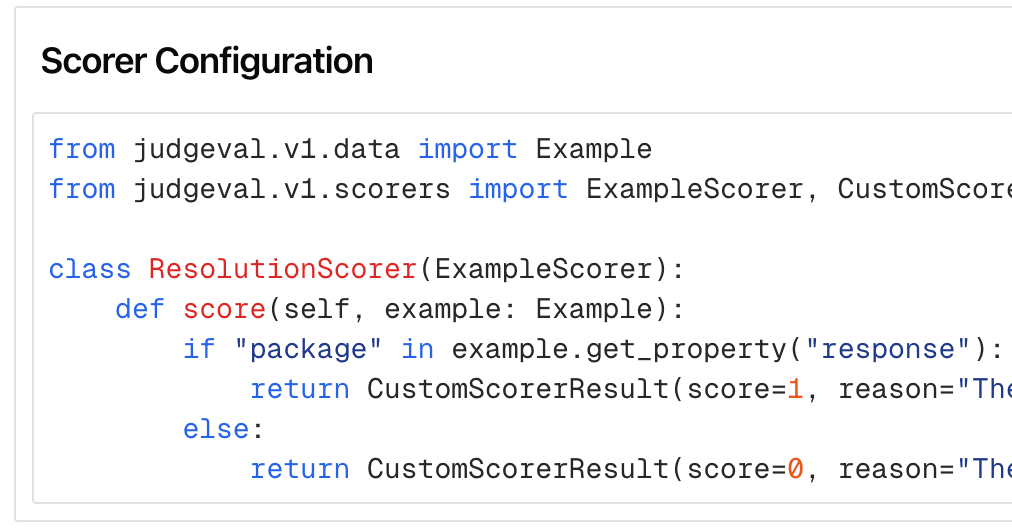

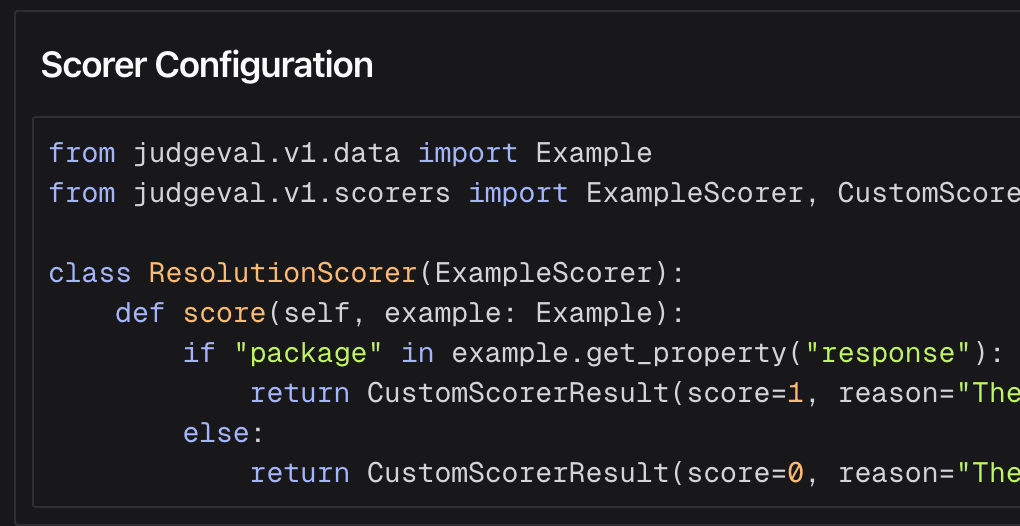
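An LLM-as-judge scorer combines your rubric with the data being evaluated into a prompt, sends that prompt to a judge model, and parses a score from the reply. The sketch below shows that flow in plain Python. It is not the judgeval implementation. `ExampleRecord`, `build_judge_prompt`, `parse_score`, and the `SCORE:` reply format are hypothetical, and the judge's reply is hard-coded rather than produced by a real model.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical plain-language rubric, like one you might write on the platform.
RUBRIC = (
    "Score 1 if the answer is factually correct and directly answers the question. "
    "Score 0 otherwise."
)


@dataclass
class ExampleRecord:
    # Hypothetical stand-in for one input/output pair (not judgeval's Example class).
    input: str
    actual_output: str


def build_judge_prompt(rubric: str, example: ExampleRecord) -> str:
    """Combine the rubric and a single example into a prompt for a judge model."""
    return (
        f"Rubric:\n{rubric}\n\n"
        f"Question:\n{example.input}\n\n"
        f"Answer:\n{example.actual_output}\n\n"
        "Reply with 'SCORE: <number>' followed by a short reason."
    )


def parse_score(judge_reply: str) -> Optional[float]:
    """Extract the numeric score from the judge's reply, or return None if it is missing."""
    match = re.search(r"SCORE:\s*([0-9]*\.?[0-9]+)", judge_reply)
    return float(match.group(1)) if match else None


example = ExampleRecord(
    input="What is the capital of the United States?",
    actual_output="I think it's New York City.",
)
prompt = build_judge_prompt(RUBRIC, example)
# A real judge model would generate this reply; it is hard-coded here for illustration.
print(parse_score("SCORE: 0 - New York City is not the capital."))  # 0.0
```

Asking the judge for a fixed reply format keeps parsing simple. Returning `None` on a malformed reply lets you count and inspect failed judgments instead of silently recording them as zeros.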

Custom Scorers

Custom Scorers implement arbitrary scoring logic in Python code.

- Full flexibility with any LLM, library, or custom logic

- Server-hosted execution for production monitoring
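Here is the kind of deterministic logic a Custom Scorer can hold. It is a minimal sketch only. The class name, its `score` method, and the `ExampleRecord` type are hypothetical and do not reflect judgeval's Custom Scorer interface. See the Custom Scorers page for the actual base class and hosting steps.

```python
from dataclasses import dataclass


@dataclass
class ExampleRecord:
    # Hypothetical record type holding the fields this scorer needs.
    input: str
    actual_output: str
    expected_output: str


class ContainsExpectedAnswerScorer:
    """Hypothetical code-defined scorer: 1.0 if the expected answer appears in the output."""

    name = "ContainsExpectedAnswer"

    def score(self, example: ExampleRecord) -> float:
        # Lowercase and collapse whitespace so minor formatting differences don't matter.
        actual = " ".join(example.actual_output.lower().split())
        expected = " ".join(example.expected_output.lower().split())
        return 1.0 if expected in actual else 0.0


scorer = ContainsExpectedAnswerScorer()
good = ExampleRecord(
    input="What is the capital of the United States?",
    actual_output="The capital of the U.S. is Washington, D.C.",
    expected_output="Washington, D.C.",
)
bad = ExampleRecord(
    input="What is the capital of the United States?",
    actual_output="I think it's New York City.",
    expected_output="Washington, D.C.",
)
print(scorer.score(good), scorer.score(bad))  # 1.0 0.0
```

A cheap deterministic check like this pairs well with an LLM-based scorer. The deterministic check catches obvious failures at no model cost, and the LLM-based scorer handles answers that are correct but phrased differently.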

Regression Testing

Regression Testing runs evals as unit tests in CI/CD pipelines.

- Integrates with pytest and standard testing frameworks

- Automatically fails when scores drop below thresholds
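At its core, a regression test of this kind scores a fixed dataset and asserts that the aggregate score stays at or above a threshold. The sketch below shows that shape as a plain pytest-style test. The threshold, the cases, and the toy `contains_expected` scorer are hypothetical placeholders. In practice, the agent outputs would come from running your agent, and the scores would come from your judgeval scorers.

```python
# Hypothetical threshold; pick one based on your own baseline runs.
ACCURACY_THRESHOLD = 0.9

# Hypothetical regression dataset: (question, agent answer, expected answer).
REGRESSION_CASES = [
    (
        "What is the capital of the United States?",
        "The capital of the U.S. is Washington, D.C.",
        "Washington, D.C.",
    ),
    ("What is the capital of France?", "Paris is the capital of France.", "Paris"),
]


def contains_expected(actual: str, expected: str) -> float:
    """Toy scorer: 1.0 if the expected answer appears in the output (case-insensitive)."""
    return 1.0 if expected.lower() in actual.lower() else 0.0


def mean_score(cases) -> float:
    """Average the per-case scores over the whole dataset."""
    scores = [contains_expected(actual, expected) for _, actual, expected in cases]
    return sum(scores) / len(scores)


def test_accuracy_does_not_regress():
    # pytest collects test_* functions automatically; a failing assert fails the CI step.
    score = mean_score(REGRESSION_CASES)
    assert score >= ACCURACY_THRESHOLD, f"accuracy {score:.2f} fell below {ACCURACY_THRESHOLD}"
```

Put this in a test file (for example one named `test_*.py`) and run `pytest` in your pipeline. If the mean score falls below the threshold, the assertion fails, and so does the build.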

Quickstart

Build and test scorers:

```python
from judgeval import Judgeval
from judgeval.v1.data.example import Example

client = Judgeval(project_name="default_project")

# Retrieve a PromptScorer created on the platform.
# Create the scorer on the Judgment platform first with your rubric.
scorer = client.scorers.prompt_scorer.get(name="AccuracyScorer")

# Examples to test your rubric on: one correct answer and one incorrect answer
test_examples = [
    Example.create(
        input="What is the capital of the United States?",
        actual_output="The capital of the U.S. is Washington, D.C.",
    ),
    Example.create(
        input="What is the capital of the United States?",
        actual_output="I think it's New York City.",
    ),
]

# Run the scorer against the examples
results = client.evaluation.create().run(
    examples=test_examples,
    scorers=[scorer],
    eval_run_name="accuracy_test",
)
```

Next Steps

- Custom Scorers - Code-defined scorers using any LLM or library dependency

- Prompt Scorers - LLM-as-a-judge scorers defined by custom rubrics on the platform

- Monitor Agent Behavior in Production - Use scorers to monitor your agent's performance in production.