Prompt Scorers

Use natural language rubrics to evaluate agent behavior in your LLM system.


Create a Prompt Scorer

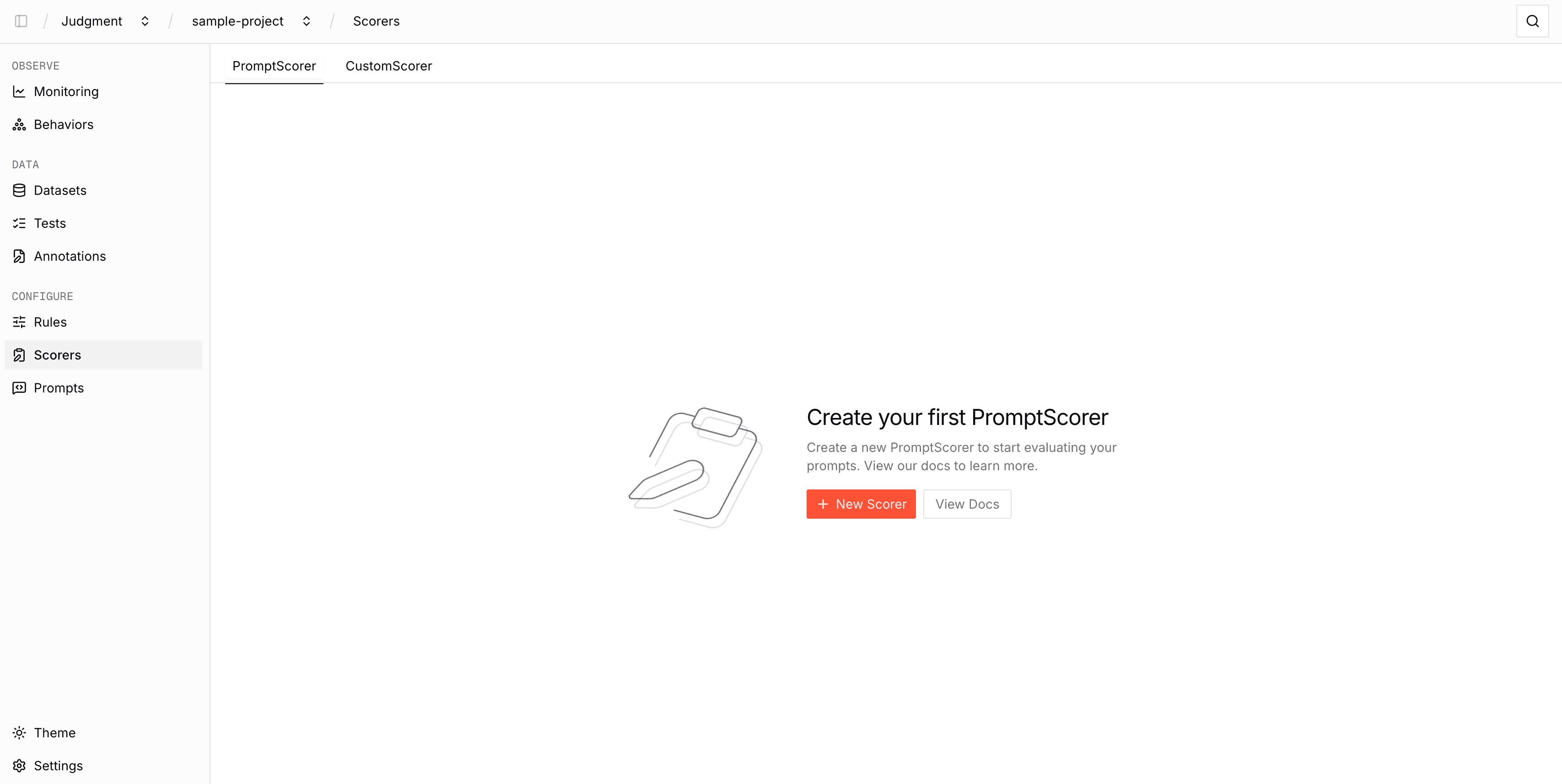

Navigate to Scorers

Within your project, go to the Scorers tab in the sidebar and select the PromptScorer section.

Create your scorer

Click New Scorer and enter a name. By default, the scorer evaluates Examples.

Then, click Next to configure the scorer.

Configure the scorer

- Model: Choose your judge model.
- Prompt: Define your evaluation criteria (supports {{ mustache template }} variables).
- Threshold: Scores above this value count as successes.
- Choice Scorers (optional): Map specific choices to scores.
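To make these settings concrete, here is a minimal Python sketch of how a rubric with template variables, a choice-to-score mapping, and a threshold fit together. The rubric text, the `input` and `actual_output` field names, the `Y`/`N` choices, and the threshold value are all hypothetical. The `render` helper supports only simple `{{ name }}` substitution, not full Mustache (sections, escaping), and none of this is how the platform itself evaluates scorers.

```python
import re

# Hypothetical rubric using {{ mustache }}-style variables.
PROMPT = (
    "Does the response answer the question?\n"
    "Question: {{ input }}\n"
    "Response: {{ actual_output }}\n"
    "Answer with Y or N."
)

# Hypothetical choice-to-score mapping and threshold.
CHOICE_SCORES = {"Y": 1.0, "N": 0.0}
THRESHOLD = 0.5


def render(template: str, fields: dict[str, str]) -> str:
    """Replace each {{ name }} with fields[name]; only simple variables are supported."""
    def sub(match: re.Match) -> str:
        return fields[match.group(1)]
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", sub, template)


def score_choice(choice: str) -> tuple[float, bool]:
    """Map the judge's choice to a score and check it against the threshold."""
    score = CHOICE_SCORES[choice]
    # Scores strictly above the threshold count as successes.
    return score, score > THRESHOLD


example = {"input": "What is 2 + 2?", "actual_output": "4"}
print(render(PROMPT, example))
print(score_choice("Y"))  # (1.0, True)
```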

Test the scorer

Test your Prompt Scorer with custom inputs. Enter your test data and click Run Scorer.

You'll see the judge's score, reasoning, and choice in the output.
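As a rough illustration of that output, the sketch below parses a judge reply into a score, reasoning, and choice. It assumes the judge replies with JSON containing `choice` and `reasoning` keys, and that choices map to scores through a hypothetical `CHOICE_SCORES` table; the platform's actual response format may differ.

```python
import json
from dataclasses import dataclass

# Hypothetical choice-to-score mapping, as configured under Choice Scorers.
CHOICE_SCORES = {"Y": 1.0, "N": 0.0}


@dataclass
class JudgeResult:
    score: float
    reasoning: str
    choice: str


def parse_judge_output(raw: str) -> JudgeResult:
    """Parse an assumed JSON reply of the form {"choice": ..., "reasoning": ...}."""
    data = json.loads(raw)
    choice = data["choice"]
    if choice not in CHOICE_SCORES:
        raise ValueError(f"unexpected choice: {choice!r}")
    return JudgeResult(score=CHOICE_SCORES[choice], reasoning=data["reasoning"], choice=choice)


raw_reply = '{"choice": "N", "reasoning": "The response does not address the question."}'
print(parse_judge_output(raw_reply))
```

Rejecting unknown choices early makes a misbehaving judge visible instead of silently producing a score.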

Trace Prompt Scorers

A Trace Prompt Scorer evaluates a full trace (or a subtree of one) rather than an Example with specified input fields. Use it when your judge needs multiple trace spans as context.

Configure the scorer

A Trace Prompt Scorer has no fixed input fields, so its prompt does not need mustache template variables. Instead, write the prompt assuming the judge receives a full trace as input.
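The sketch below shows one way a trace could be flattened into text for a judge, assuming a hypothetical `Span` record with a name, input, output, and child spans. Passing any span instead of the root serializes just that subtree. The serialization format is illustrative only, not the platform's.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    """Hypothetical span record: a name, its input/output, and child spans."""
    name: str
    input: str = ""
    output: str = ""
    children: list["Span"] = field(default_factory=list)


def serialize(span: Span, depth: int = 0) -> list[str]:
    """Flatten a span and its descendants (depth-first) into indented text lines."""
    pad = "  " * depth
    lines = [f"{pad}[{span.name}] input={span.input!r} output={span.output!r}"]
    for child in span.children:
        lines.extend(serialize(child, depth + 1))
    return lines


# The prompt talks about "the trace" instead of using template variables.
TRACE_PROMPT = "Did the agent call a tool before answering? Answer Y or N."

trace = Span(
    "agent",
    input="Weather in Paris?",
    output="It is sunny.",
    children=[Span("get_weather", input="Paris", output="sunny")],
)

judge_input = TRACE_PROMPT + "\n\nTrace:\n" + "\n".join(serialize(trace))
print(judge_input)
```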

Test the scorer

Use the playground to sanity-check and iterate on your Prompt Scorers against real traces before you deploy them to production.

Select a trace Dataset, optionally filter specific traces, and run your scorer to view results.

Scorer Comparisons

Test scorer variations side-by-side before you commit changes.

Duplicate the scorer

Click the + button on the right side of the page to create a comparison draft, a duplicate of your scorer.

Modify the comparison draft

You can modify the model, prompt, or settings in your comparison draft.

Run the scorers

Enter a test input and run both scorers at once to compare their results side by side.
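The comparison can be sketched as running two scorer functions over the same inputs and listing where they disagree. Here `main_scorer` and `draft_scorer` are hypothetical stand-ins for the main scorer and the comparison draft; they use simple heuristics instead of LLM judge calls so the example runs offline.

```python
from typing import Callable

# Each scorer maps a test input to a score in [0, 1].
Scorer = Callable[[dict[str, str]], float]


def main_scorer(example: dict[str, str]) -> float:
    """Stand-in for the main scorer's judge call (hypothetical heuristic)."""
    return 1.0 if example["actual_output"] else 0.0


def draft_scorer(example: dict[str, str]) -> float:
    """Stand-in for a stricter comparison draft (hypothetical heuristic)."""
    return 1.0 if len(example["actual_output"].split()) >= 3 else 0.0


def compare(a: Scorer, b: Scorer, examples: list[dict[str, str]]) -> list[tuple[int, float, float]]:
    """Run both scorers on every example; return (index, score_a, score_b) where they disagree."""
    diffs = []
    for i, ex in enumerate(examples):
        sa, sb = a(ex), b(ex)
        if sa != sb:
            diffs.append((i, sa, sb))
    return diffs


examples = [
    {"actual_output": "Paris is the capital of France."},
    {"actual_output": "Paris"},
    {"actual_output": ""},
]
print(compare(main_scorer, draft_scorer, examples))  # [(1, 1.0, 0.0)]
```

Focusing on disagreements is what makes a side-by-side run useful: inputs where both configurations agree say little about which one to promote.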

Update the main scorer

When you find a better configuration, click Update Main to promote it. You can continue iterating, testing, and updating the main scorer until you have an optimal configuration.

Next Steps

- Monitor Agent Behavior in Production: Deploy your scorers for real-time agent evaluation.