Agent Behavior Monitoring

Run real-time checks on your agents' behavior in production.

Agent behavior monitoring provides observability and evaluation for your production agents. By combining tracing, scoring, alerting, and bucketing, you can track what your agents do, measure how well they perform, detect when they misbehave, and analyze behavior patterns, all in real time.

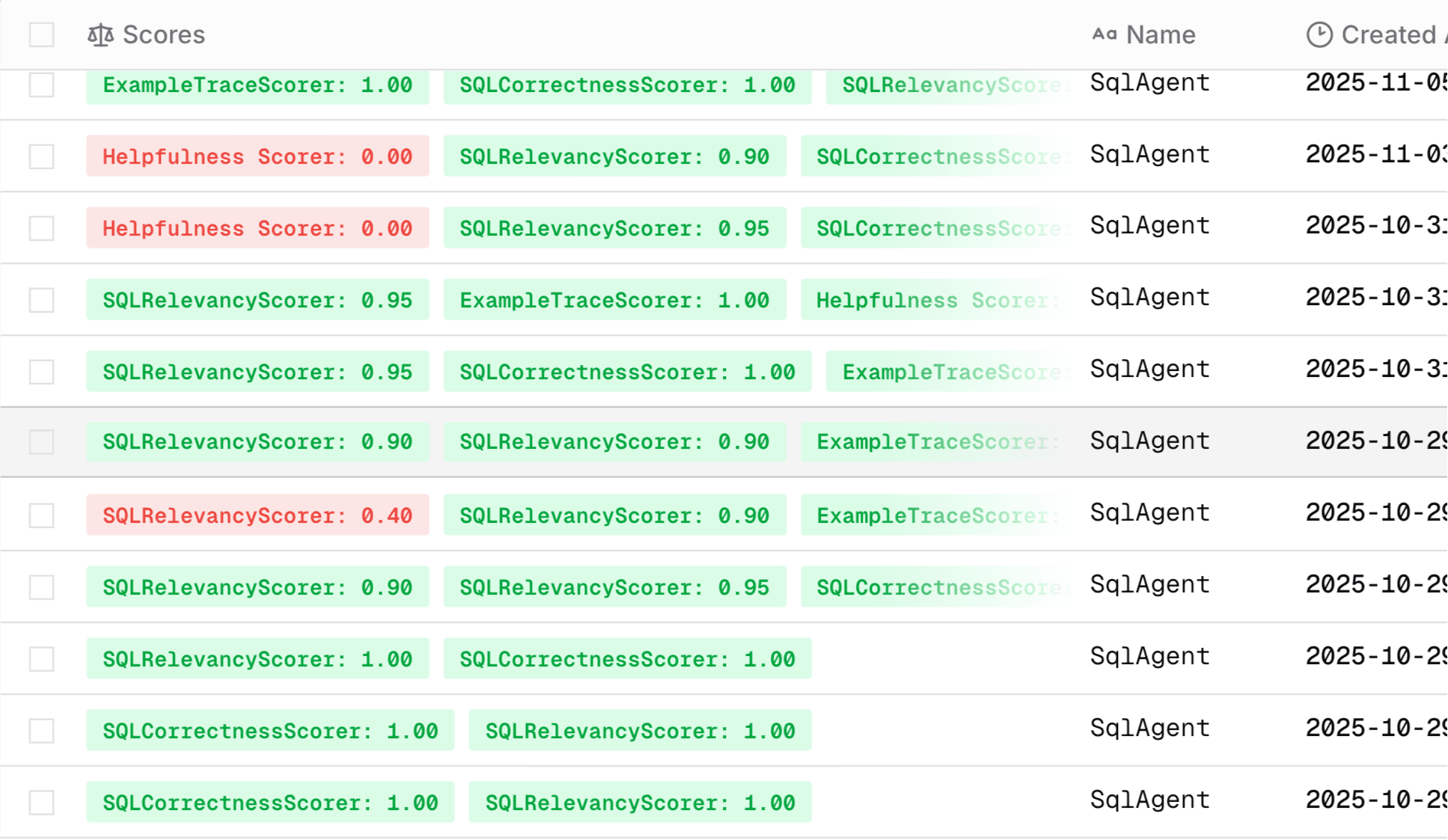

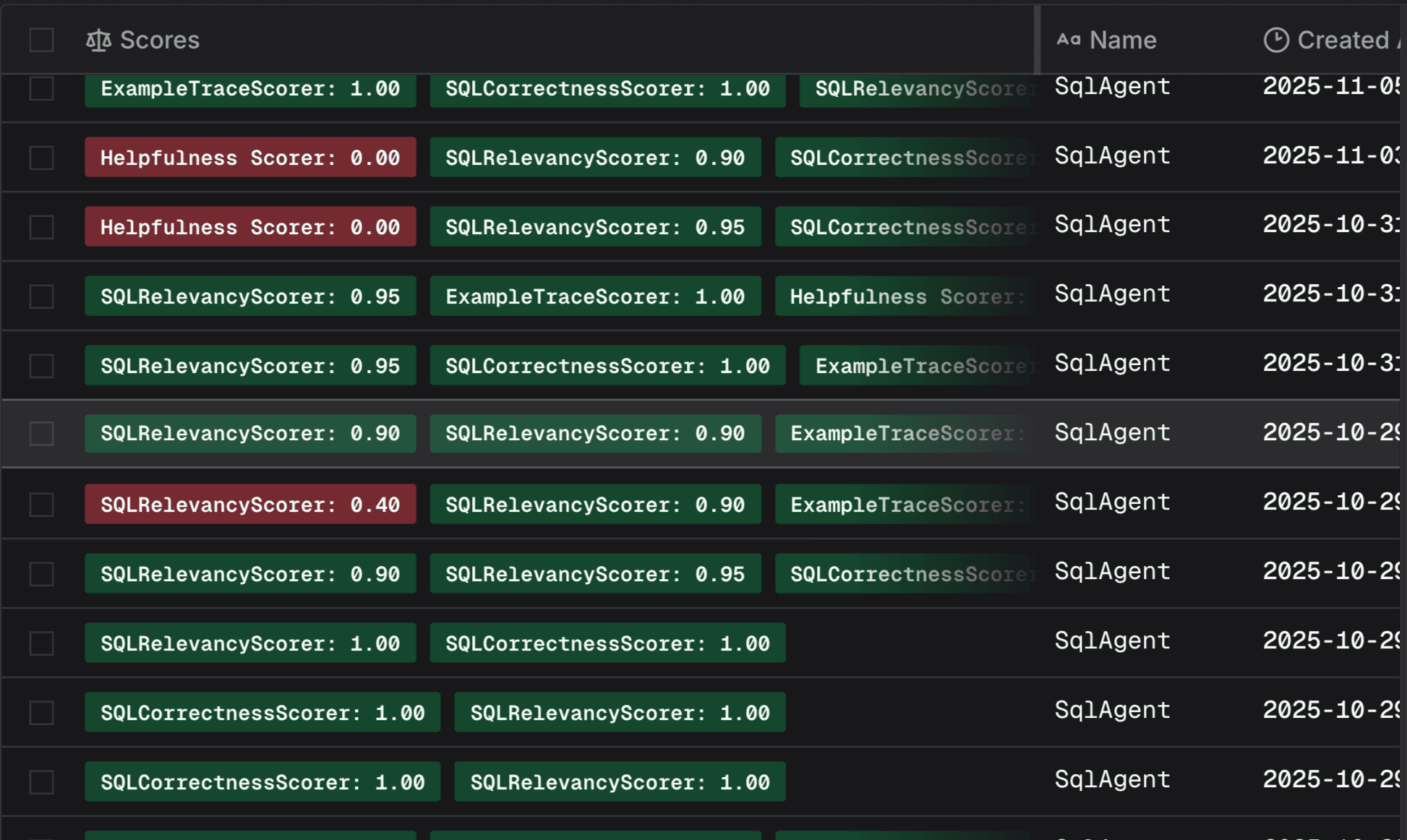

Scoring

Evaluate agent quality with built-in scorers, custom logic, or LLM-as-judge.

- Server-hosted execution with zero latency impact

- Scores individual interactions or full traces

Complete Monitoring Setup

This quickstart demonstrates a complete agent behavior monitoring implementation.

Initialize Tracing

Set up tracing to capture all agent behavior. Tracing is the foundation: it records what your agent does so you can evaluate it.

    from judgeval.tracer import Tracer, wrap
    from openai import OpenAI

    # Initialize tracer for your project
    judgment = Tracer(project_name="customer_service")

    # Wrap OpenAI client to auto-trace all LLM calls
    client = wrap(OpenAI())

Create a Custom Scorer

Build scoring logic to evaluate agent behavior. This scorer checks if a customer service agent properly addresses package inquiries.

    from judgeval.data import Example
    from judgeval.scorers.example_scorer import ExampleScorer
    from openai import OpenAI


    class CustomerRequest(Example):
        # Fields the scorer reads from each example
        request: str
        response: str


    class PackageInquiryScorer(ExampleScorer):
        name: str = "Package Inquiry Scorer"
        server_hosted: bool = True

        async def a_score_example(self, example: CustomerRequest):
            client = OpenAI()

            # Ask an LLM judge for a strict YES/NO verdict
            evaluation_prompt = f"""
            Evaluate if the customer service response adequately addresses a package inquiry.

            Customer request: {example.request}
            Agent response: {example.response}

            Does the response address package-related concerns? Answer only "YES" or "NO".
            """

            completion = client.chat.completions.create(
                model="gpt-5-mini",
                messages=[{"role": "user", "content": evaluation_prompt}]
            )
            evaluation = completion.choices[0].message.content.strip().upper()

            # Map the verdict to a score and record the reason
            if evaluation == "YES":
                self.reason = "Response appropriately addresses package inquiry"
                return 1.0
            else:
                self.reason = "Response doesn't adequately address package inquiry"
                return 0.0

Deploy Server-Hosted Scorer

Upload your scorer to run in secure infrastructure with zero latency impact on your agent.

    echo -e "pydantic\nopenai" > requirements.txt
    judgeval upload_scorer customer_service_scorer.py requirements.txt

Instrument Your Agent

Add tracing decorators and online evaluation to your agent code.

    from judgeval.tracer import Tracer, wrap
    from openai import OpenAI
    from customer_service_scorer import PackageInquiryScorer, CustomerRequest

    judgment = Tracer(project_name="customer_service")
    client = wrap(OpenAI())


    class CustomerServiceAgent:
        @judgment.observe(span_type="tool")
        def handle_request(self, request: str) -> str:
            completion = client.chat.completions.create(
                model="gpt-5-mini",
                messages=[
                    {"role": "system", "content": "You are a helpful customer service agent."},
                    {"role": "user", "content": request}
                ]
            )
            response = completion.choices[0].message.content

            # Evaluate behavior with server-hosted scorer
            judgment.async_evaluate(
                scorer=PackageInquiryScorer(),
                example=CustomerRequest(request=request, response=response),
                sampling_rate=0.95
            )
            return response

        @judgment.agent()
        @judgment.observe(span_type="function")
        def run(self, request: str) -> str:
            return self.handle_request(request)


    agent = CustomerServiceAgent()
    result = agent.run("Where is my package? I ordered it last week.")
    print(result)

What's happening:

- @judgment.observe() captures execution traces

- wrap(OpenAI()) auto-tracks LLM calls

- judgment.async_evaluate() scores behavior in real time

- sampling_rate=0.95 evaluates 95% of requests
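
To make the sampling_rate=0.95 setting concrete, here is a minimal sketch of per-request sampling. It assumes each request is selected independently with the given probability. The helpers should_evaluate and sampled_fraction are hypothetical and are not part of judgeval; the library's actual sampling logic may differ.

```python
import random


def should_evaluate(sampling_rate: float, rng: random.Random) -> bool:
    """Hypothetical helper: return True for roughly `sampling_rate` of calls."""
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0.0 and 1.0")
    # rng.random() is uniform on [0.0, 1.0), so this comparison is True
    # with probability `sampling_rate`.
    return rng.random() < sampling_rate


def sampled_fraction(sampling_rate: float, requests: int, seed: int = 0) -> float:
    """Simulate `requests` calls and report the fraction selected for scoring."""
    rng = random.Random(seed)
    selected = sum(should_evaluate(sampling_rate, rng) for _ in range(requests))
    return selected / requests


if __name__ == "__main__":
    # Prints a value close to 0.95
    print(f"{sampled_fraction(0.95, 10_000):.3f}")
```

Under this assumption, a lower rate trades evaluation coverage for lower scoring cost, while a rate of 1.0 scores every request.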

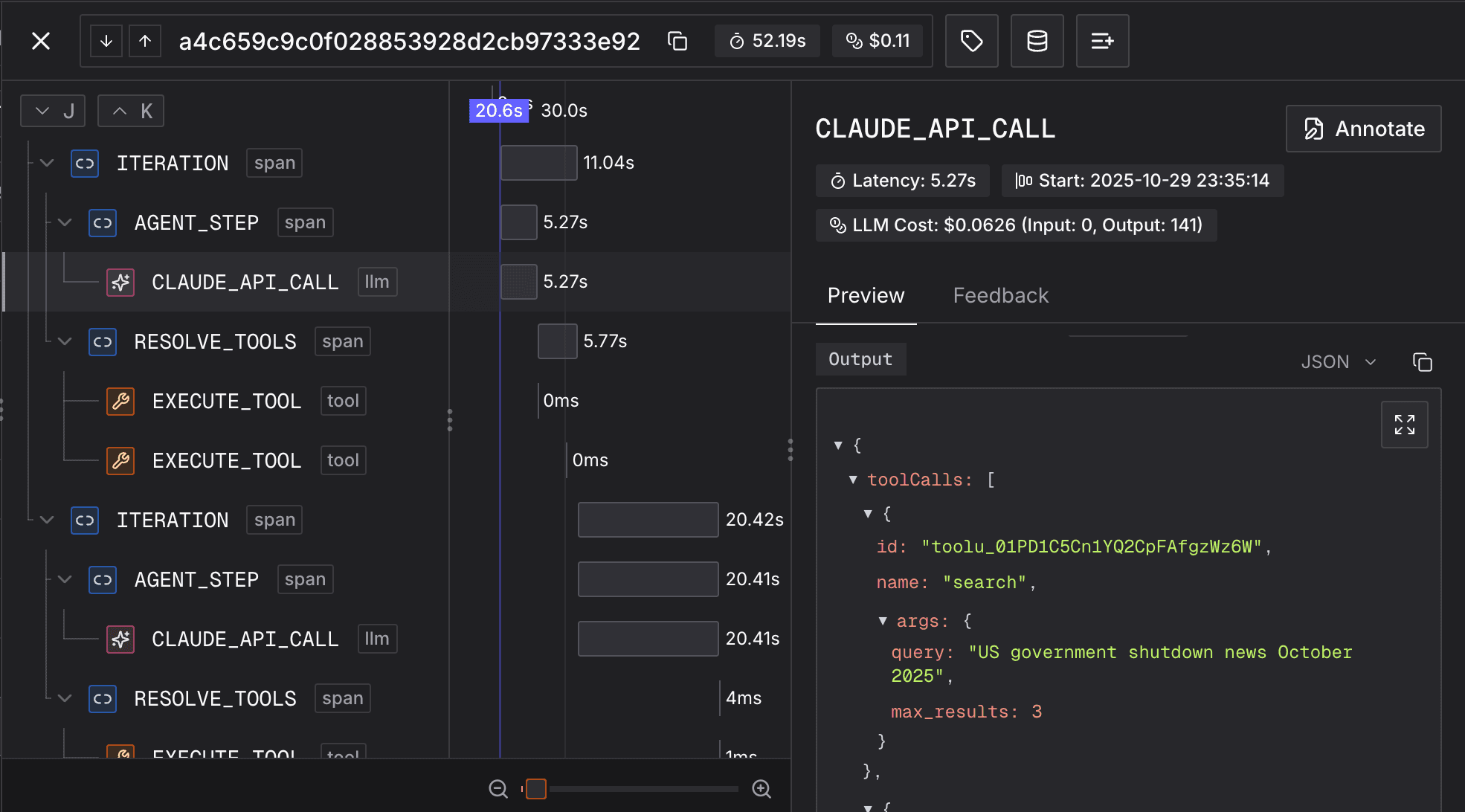

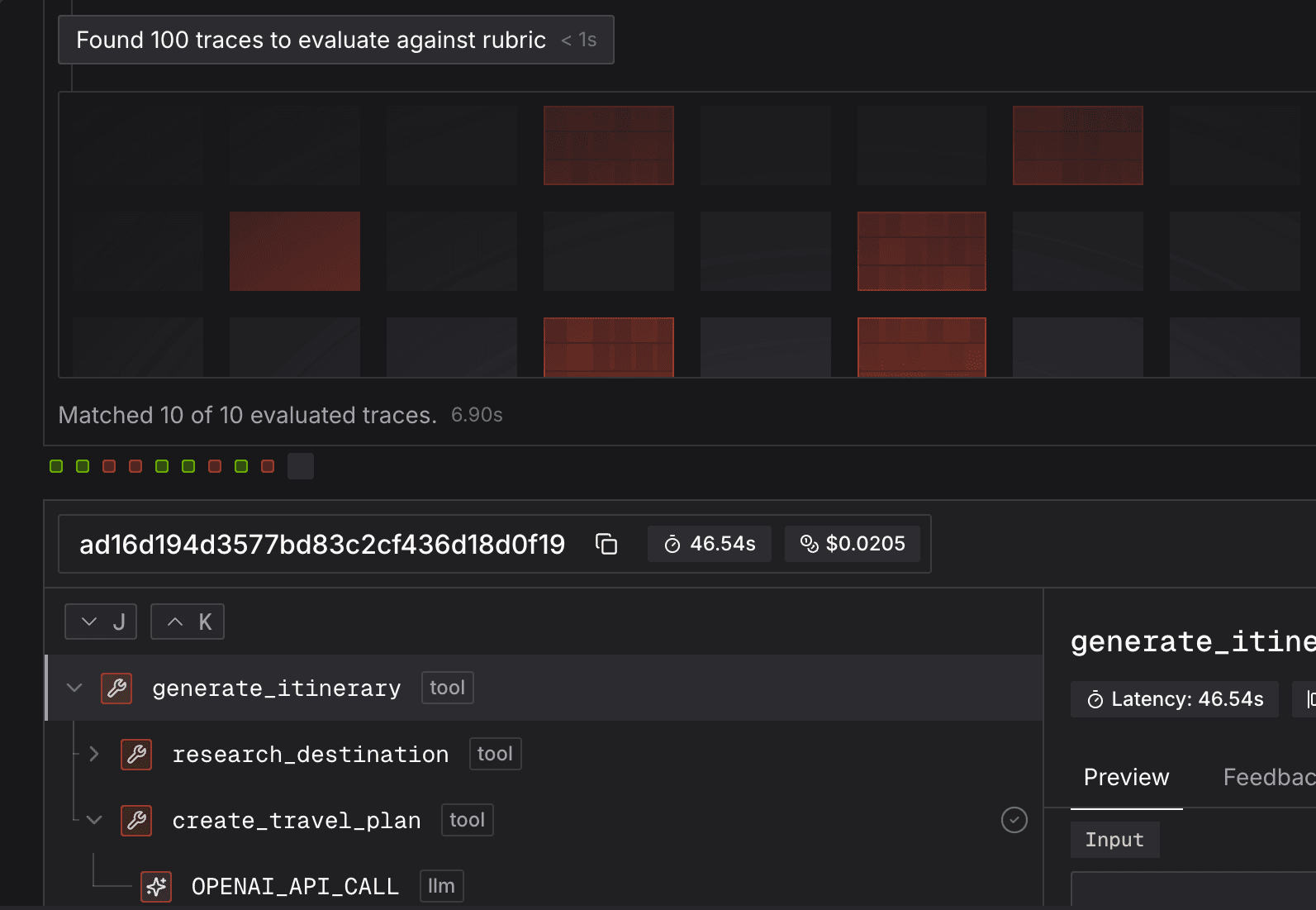

View traces with scoring results in the Judgment platform:

Configure Alerts

Set up a rule with alerts to notify your team when the scorer detects problematic behavior.

Navigate to the Rules page in your project dashboard and create a rule:

Basic Configuration:

- Rule Name: Package Inquiry Failures

- Description: Alert when agent fails to address package inquiries

Conditions:

- Metric: Package Inquiry Scorer

- Operator: fails (or < 0.5)

- Alert Frequency: 3 times in 5 minutes

- Cooldown: 30 minutes
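
The frequency and cooldown settings interact, so a small sketch may help. It assumes a sliding-window reading of the conditions: the rule fires once 3 failing scores fall within a 5-minute window, then stays silent for 30 minutes. The AlertRule class is hypothetical and only models the configuration listed here; the platform's actual evaluation logic may differ.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AlertRule:
    """Hypothetical model of a rule: N failures within a window, then a cooldown."""
    threshold: float = 0.5             # scores below this count as failures
    max_failures: int = 3              # "3 times ..."
    window_seconds: float = 5 * 60     # "... in 5 minutes"
    cooldown_seconds: float = 30 * 60  # silence after an alert
    _failures: deque = field(default_factory=deque)
    _last_alert: Optional[float] = None

    def record(self, score: float, now: float) -> bool:
        """Record a score at time `now` (in seconds); return True if an alert fires."""
        if score >= self.threshold:
            return False
        self._failures.append(now)
        # Drop failures that have fallen out of the sliding window.
        while self._failures and now - self._failures[0] > self.window_seconds:
            self._failures.popleft()
        in_cooldown = (
            self._last_alert is not None
            and now - self._last_alert < self.cooldown_seconds
        )
        if len(self._failures) >= self.max_failures and not in_cooldown:
            self._last_alert = now
            return True
        return False


rule = AlertRule()
# Four failures one minute apart, then one much later.
fired = [rule.record(0.0, t) for t in (0, 60, 120, 180, 2000)]
print(fired)  # [False, False, True, False, False]
```

In this model the fourth failure is suppressed by the cooldown, and the late failure does not fire because the earlier failures have left the window.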

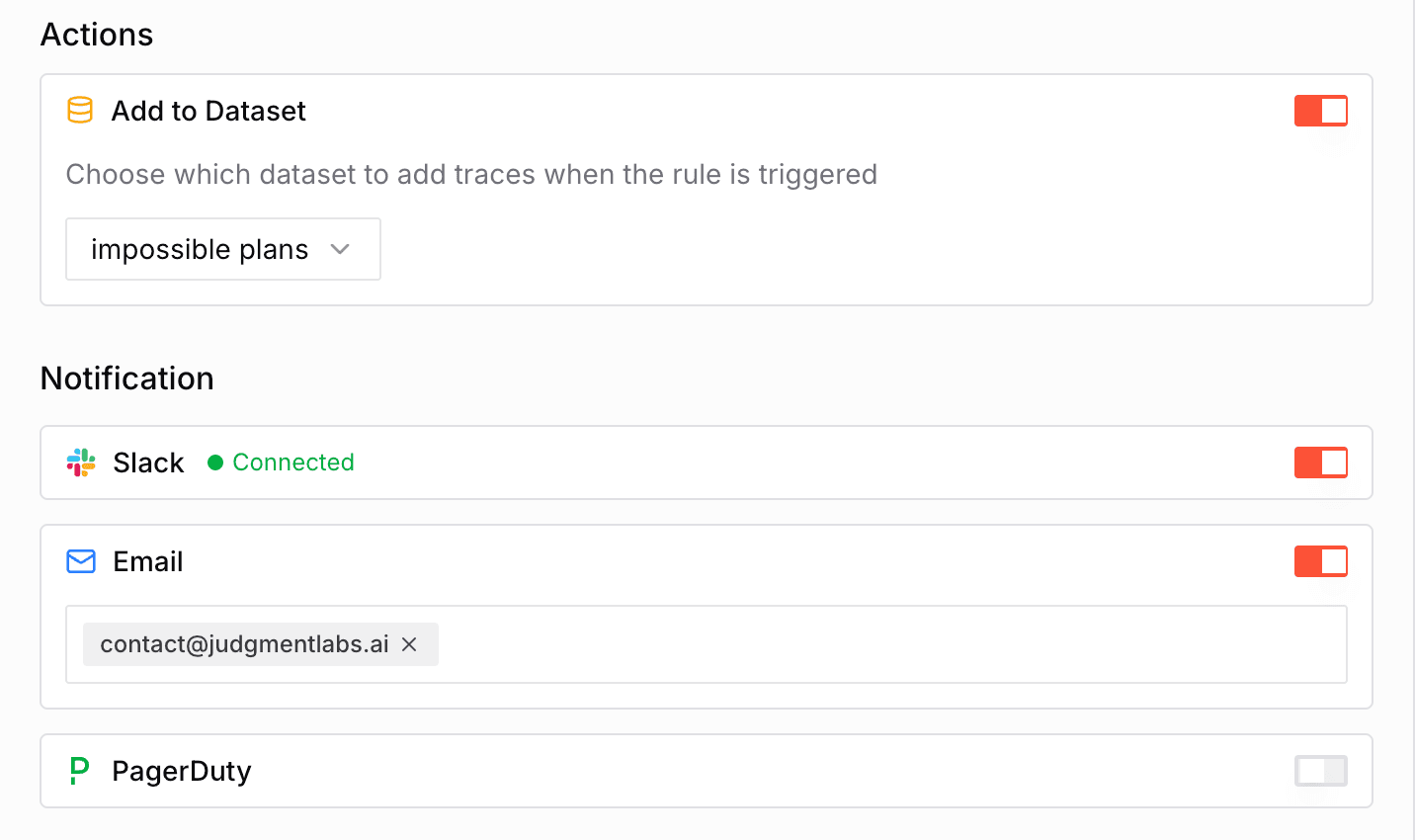

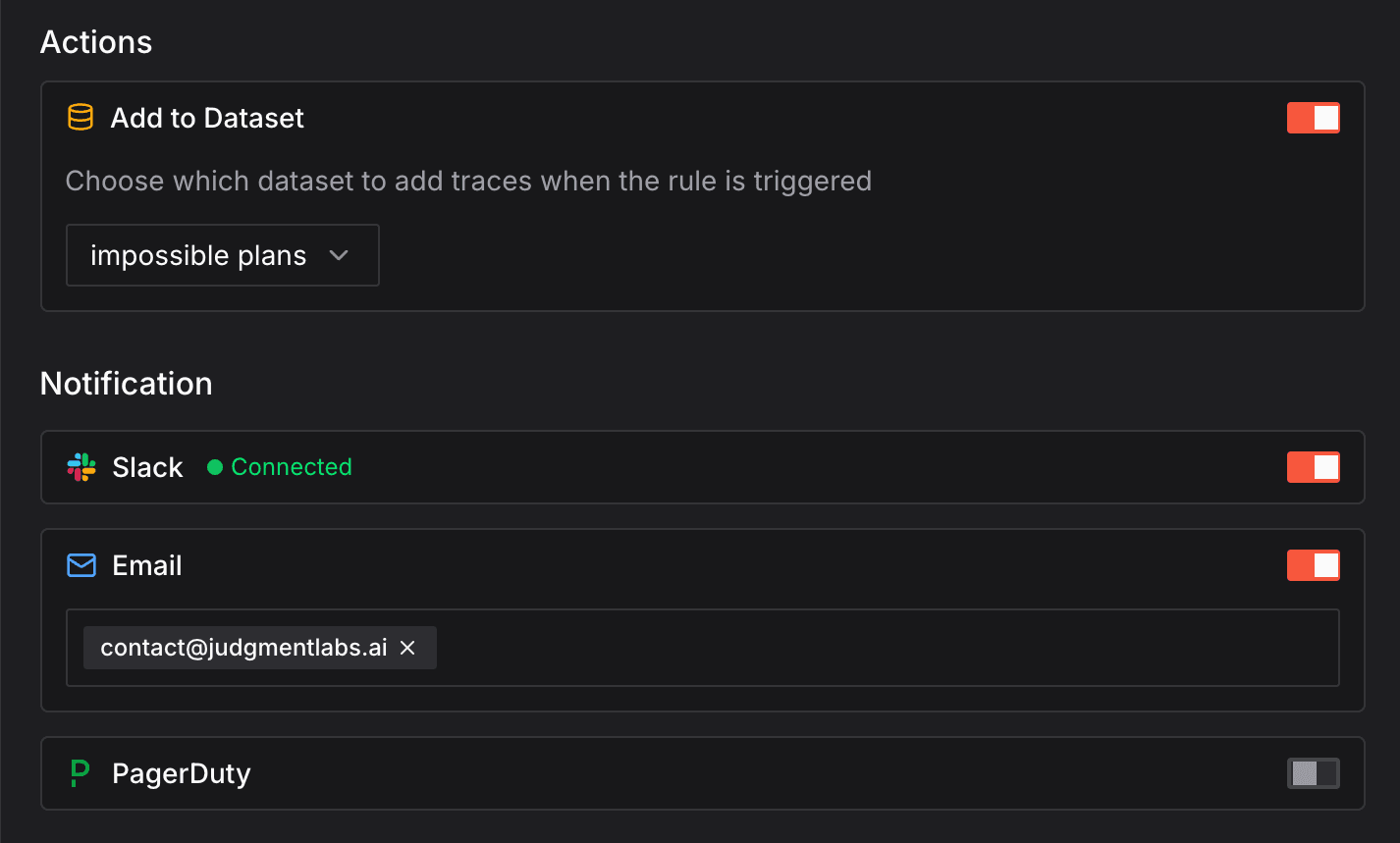

Actions:

- Add to dataset: package-inquiry-failures

- Send Slack notification

- Email: your email address

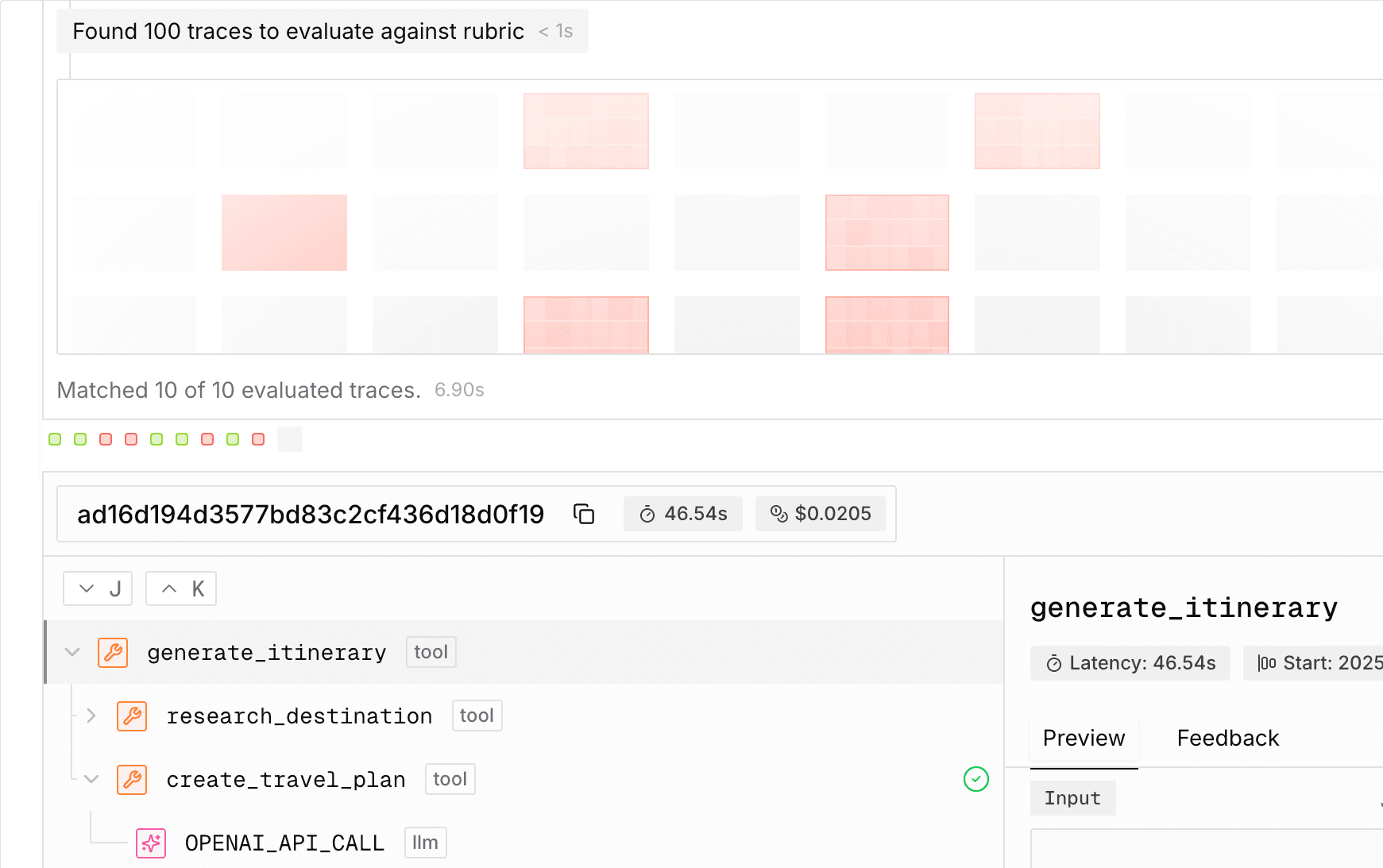

Set Up Bucketing

Configure bucketing to automatically group similar behavioral patterns.

Navigate to Bucketing in the sidebar and create a configuration:

- Describe your agent's purpose to improve bucketing accuracy

- Create a bucketing configuration with a rubric-based classifier

- Review sample traces and mark them as Accept/Reject

- Refine the rubric based on suggested improvements

- Finalize and name your configuration

Bucketing automatically routes matching traces to datasets for pattern analysis.

Your agent now has complete behavior monitoring: tracing captures what it does, scoring evaluates quality, alerts notify about issues, and bucketing groups patterns for analysis.

Advanced Features

Multi-Agent System Monitoring

When monitoring multi-agent systems, behavior tracking becomes more complex as multiple agents coordinate and interact. The @judgment.agent() decorator identifies which agent is responsible for each action, enabling you to:

- Track agent-specific behavior patterns

- Identify which agent caused failures

- Measure individual agent performance

- Analyze inter-agent communication

Here's a complete multi-agent monitoring example:

Entry point script:

    from planning_agent import PlanningAgent

    if __name__ == "__main__":
        planning_agent = PlanningAgent("planner-1")
        goal = "Build a multi-agent system"
        # invoke_agent is the traced entry point defined on PlanningAgent
        result = planning_agent.invoke_agent(goal)
        print(result)

utils.py:

    from judgeval.tracer import Tracer

    judgment = Tracer(project_name="multi-agent-system")

planning_agent.py:

    from utils import judgment
    from research_agent import ResearchAgent
    from task_agent import TaskAgent


    class PlanningAgent:
        def __init__(self, id):
            self.id = id

        @judgment.agent()  # Only add @judgment.agent() to the entry point function of the agent
        @judgment.observe()
        def invoke_agent(self, goal):
            print(f"Agent {self.id} is planning for goal: {goal}")
            research_agent = ResearchAgent("Researcher1")
            task_agent = TaskAgent("Tasker1")
            research_results = research_agent.invoke_agent(goal)
            task_result = task_agent.invoke_agent(research_results)
            return f"Results from planning and executing for goal '{goal}': {task_result}"

        @judgment.observe()  # No need to add @judgment.agent() here
        def random_tool(self):
            pass

research_agent.py:

    from utils import judgment


    class ResearchAgent:
        def __init__(self, id):
            self.id = id

        @judgment.agent()
        @judgment.observe()
        def invoke_agent(self, topic):
            return f"Research notes for topic: {topic}: Findings and insights include..."

task_agent.py:

    from utils import judgment


    class TaskAgent:
        def __init__(self, id):
            self.id = id

        @judgment.agent()
        @judgment.observe()
        def invoke_agent(self, task):
            result = f"Performed task: {task}, here are the results: Results include..."
            return result

In the resulting trace, each agent's actions are labeled, making it easy to track execution flow and identify which agent caused issues. You can then:

- Create agent-specific scorers to evaluate individual agent behavior

- Set up alerts targeting specific agents

- Use bucketing to group traces by agent behavior patterns
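
As a rough illustration of agent-specific analysis, the sketch below computes a failure rate per agent from scored records. The record shape (agent, score) and the sample values are hypothetical, made up for this example; they are not an export format of the Judgment platform.

```python
from collections import defaultdict


def failure_rate_by_agent(records, threshold=0.5):
    """Return {agent: fraction of scores below `threshold`} for scored records."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for record in records:
        totals[record["agent"]] += 1
        if record["score"] < threshold:
            failures[record["agent"]] += 1
    return {name: failures[name] / totals[name] for name in totals}


# Hypothetical sample data, for illustration only.
records = [
    {"agent": "PlanningAgent", "score": 1.0},
    {"agent": "ResearchAgent", "score": 0.0},
    {"agent": "ResearchAgent", "score": 1.0},
    {"agent": "TaskAgent", "score": 1.0},
]
print(failure_rate_by_agent(records))
# {'PlanningAgent': 0.0, 'ResearchAgent': 0.5, 'TaskAgent': 0.0}
```

A per-agent breakdown like this is one way to decide which agent deserves its own scorer or alert rule.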

Toggling Monitoring

Control monitoring at runtime using environment variables:

Disable all tracing:

    export JUDGMENT_MONITORING=false

Disable scoring only (traces still captured):

    export JUDGMENT_EVALUATIONS=false

This is useful for:

- Development environments where monitoring isn't needed

- High-volume periods where you want to reduce overhead

- Debugging scenarios where you need clean execution

- Cost optimization during testing
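
If you prefer to set these switches from Python, for example in a test fixture, a sketch along these lines may work. It assumes the variables are set before judgeval reads them, which has not been verified here. The env_flag helper is hypothetical: it shows one common way to interpret a true/false variable, not how judgeval parses it.

```python
import os


def env_flag(name: str, default: bool = True) -> bool:
    """Hypothetical helper: interpret an environment variable as a boolean."""
    raw = os.environ.get(name)
    if raw is None:
        return default
    return raw.strip().lower() not in {"false", "0", "no", "off"}


# Disable scoring but keep tracing, mirroring the second shell export.
os.environ["JUDGMENT_EVALUATIONS"] = "false"
os.environ.pop("JUDGMENT_MONITORING", None)

print(env_flag("JUDGMENT_MONITORING"))   # True: unset, so the default applies
print(env_flag("JUDGMENT_EVALUATIONS"))  # False
```

Setting the variables in the process environment before the tracer is created keeps the toggle in one place and avoids code changes between environments.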

Next Steps

Explore each component of agent behavior monitoring in detail:

- Tracing - Deep dive into OpenTelemetry-based tracing, distributed tracing, and advanced instrumentation

- Rules and Alerts - Configure sophisticated alerting rules with frequency thresholds, cooldowns, and multi-channel notifications

- Bucketing - Set up automated behavior classification and pattern analysis

- Custom Scorers - Build advanced scoring logic for your specific use cases

- Prompt Scorers - Use LLM-as-judge patterns for flexible evaluation