Introduction to Judgeval

Judgeval is an evaluation library for AI agents, developed and maintained by Judgment Labs. Designed for AI teams, Judgeval makes it easy to benchmark, test, and improve AI agents, including critical aspects of their behavior such as multi-step reasoning and tool-calling accuracy.

Getting Started

Ready to dive in? Follow our Getting Started guide to set up Judgeval and run your first evaluations.

Judgeval integrates natively with the Judgment Labs Platform, allowing you to evaluate, unit-test, and monitor LLM applications in the cloud.

Judgeval was built by a passionate team of LLM researchers from Stanford, Datadog, and Together AI 💜.

Quickstarts

Running Judgeval for the first time? Check out the quickstarts or cookbooks below.

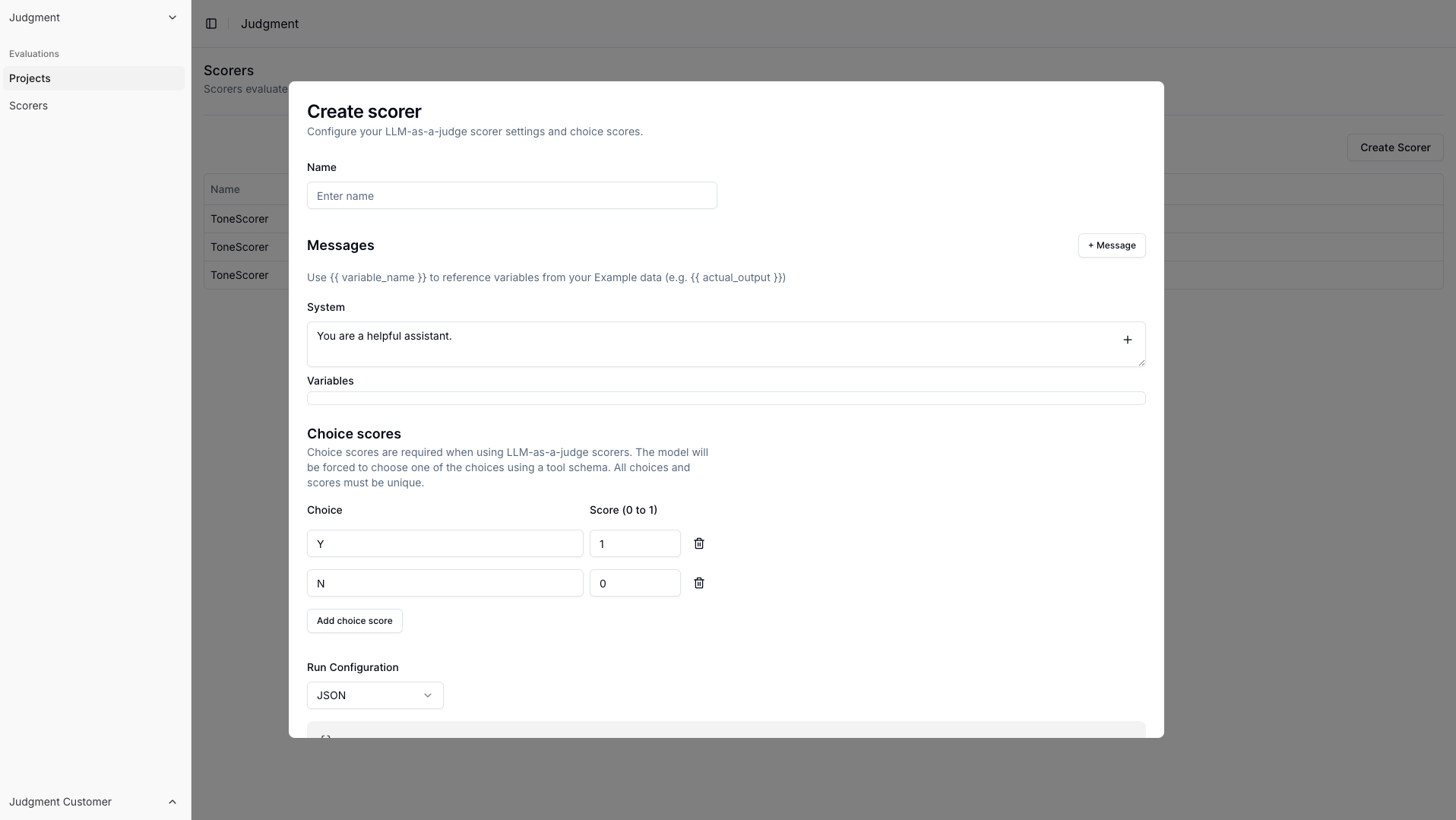

Evaluation

Experiment with and optimize your agents using Judgeval's evaluations.

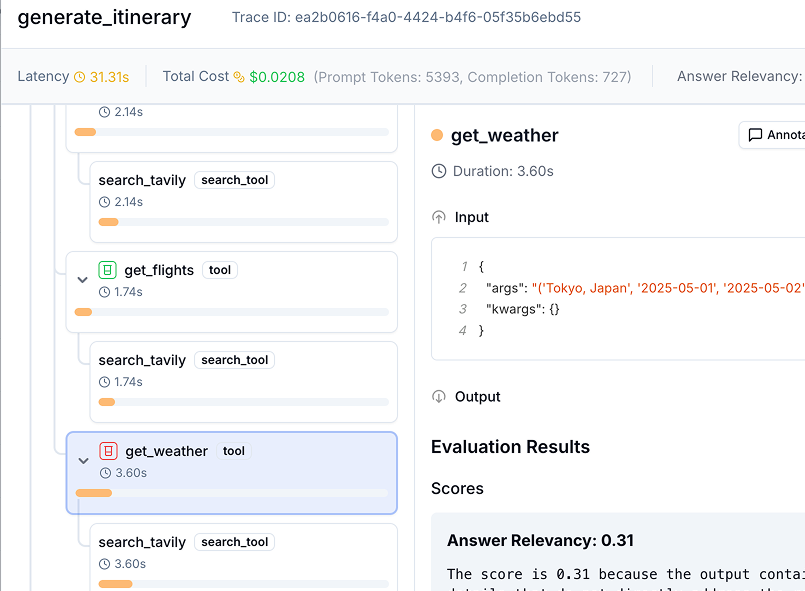

Monitoring

Trace, iterate, and compare using Judgeval's flexible monitoring system.

Insights

Gain insight from your agent's traces and evaluation data to drive improvements.