---

You are an expert in helping users integrate Judgment with their codebase. When you are helping someone integrate Judgment tracing or evaluations with their agents/workflows, refer to this file.

---

# Agent Rules

URL: /documentation/agent-rules

Integrate Judgment seamlessly with Claude Code and Cursor


Add Judgment context to your AI code editor so it can help you implement tracing, evaluations, and monitoring correctly.

## Quick Setup

**Add to global rules (recommended):**

```bash

curl https://docs.judgmentlabs.ai/agent-rules.md -o ~/.claude/CLAUDE.md

```

**Or add to project-specific rules:**

```bash

curl https://docs.judgmentlabs.ai/agent-rules.md -o CLAUDE.md

```

```bash

mkdir -p .cursor/rules

curl https://docs.judgmentlabs.ai/agent-rules.md -o .cursor/rules/judgment.mdc

```

After adding rules, your AI assistant will understand Judgment's APIs and best practices.

## What This Enables

Your AI code editor will automatically:

* Use correct Judgment SDK patterns

* Implement tracing decorators properly

* Configure evaluations with appropriate scorers

* Follow multi-agent system conventions

## Manual Setup

[View the full rules file](/agent-rules.md) to copy and paste manually.

# Security & Compliance

URL: /documentation/compliance


At Judgment Labs, we take security and compliance seriously. We maintain rigorous standards to protect our customers' data and ensure the highest level of service reliability.

## SOC 2 Compliance

### Type 2 Certification

We have successfully completed our SOC 2 Type 2 audit, demonstrating our commitment to meeting rigorous security, availability, and confidentiality standards. This comprehensive certification validates the operational effectiveness of our security controls over an extended period, ensuring consistent adherence to security protocols.

Our SOC 2 Type 2 compliance covers the following trust service criteria:

* **Security**: Protection of system resources against unauthorized access

* **Availability**: System accessibility for operation and use as committed

* **Confidentiality**: Protection of confidential information as committed

View our [SOC 2 Type 2 Report](https://app.delve.co/judgment-labs) through our compliance portal.

## HIPAA Compliance

We maintain HIPAA compliance to ensure the security and privacy of protected health information (PHI). Our infrastructure and processes are designed to meet HIPAA's strict requirements for:

* Data encryption

* Access controls

* Audit logging

* Data backup and recovery

* Security incident handling

Access our [HIPAA Compliance Report](https://app.delve.co/judgment-labs) through our compliance portal. If you're working with healthcare data, please contact our team at [contact@judgmentlabs.ai](mailto:contact@judgmentlabs.ai) to discuss your specific compliance needs.

## Security Framework

We operate under a shared responsibility model where Judgment Labs secures:

* **Application Layer**: Secure coding practices, vulnerability management, and application-level controls

* **Platform Layer**: Infrastructure security, access controls, and monitoring

* **Data Protection**: Encryption at rest and in transit, secure data handling, and privacy controls

## Trust & Transparency

### Compliance Portal

All compliance documentation, certifications, and security reports are available through our dedicated [Trust Center](https://app.delve.co/judgment-labs). This portal provides:

* Current compliance certifications

* Security assessment reports

* Third-party audit documentation

* Data processing agreements

### Data Processing Agreement (DPA)

Our Data Processing Agreement outlines the specific terms and conditions for how we process and protect your data. The DPA covers:

* Data processing purposes and legal basis

* Data subject rights and obligations

* Security measures and incident response

* International data transfers

* Sub-processor agreements

Review our [Data Processing Agreement](https://app.delve.co/judgment-labs/dpa) for detailed terms and conditions regarding data processing activities.

### Contact Information

For security-related inquiries:

* **General Security Questions**: [contact@judgmentlabs.ai](mailto:contact@judgmentlabs.ai)

* **Compliance Documentation**: Request access through our [Trust Center](https://app.delve.co/judgment-labs)

* **HIPAA Inquiries**: For healthcare data requirements, contact [support@judgmentlabs.ai](mailto:support@judgmentlabs.ai)

* **DPA Requests**: For Data Processing Agreement execution, contact [legal@judgmentlabs.ai](mailto:legal@judgmentlabs.ai)

## Our Commitment

Our security and compliance certifications demonstrate our commitment to:

* **Data Protection**: Industry-leading encryption and access controls

* **System Availability**: 99.9% uptime commitment with redundant infrastructure

* **Process Integrity**: Audited security controls and continuous monitoring

* **Privacy by Design**: Built-in privacy protections and data minimization

* **Regulatory Compliance**: Adherence to GDPR, HIPAA, and industry standards

# Get Started

URL: /documentation


[`judgeval`](https://github.com/judgmentlabs/judgeval) is an Agent Behavior Monitoring (ABM) library that helps track and judge any agent behavior in online and offline environments.

`judgeval` also enables error analysis on agent trajectories and groups trajectories by behavior and topic for deeper analysis.

`judgeval` is built and maintained by [Judgment Labs](https://judgmentlabs.ai). You can follow our latest updates via [GitHub](https://github.com/judgmentlabs/judgeval).

## Get Running in Under 2 Minutes

### Install Judgeval

```bash

uv add judgeval

```

```bash

pip install judgeval

```

### Get your API keys

Head to the [Judgment Platform](https://app.judgmentlabs.ai/register) and create an account. Then, copy your API key and Organization ID and set them as environment variables.


You get 50,000 free trace spans and 1,000 free evals each month. No credit card required.

```bash

export JUDGMENT_API_KEY="your_key_here"

export JUDGMENT_ORG_ID="your_org_id_here"

```

```bash

# Add to your .env file

JUDGMENT_API_KEY="your_key_here"

JUDGMENT_ORG_ID="your_org_id_here"

```
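
Before running any of the examples below, it can help to confirm that both variables are actually visible to Python. The following is a minimal sketch using only the standard library; the helper name `missing_judgment_vars` is hypothetical and not part of `judgeval`.

```python
import os

# The two variable names come from the setup step above.
REQUIRED_VARS = ("JUDGMENT_API_KEY", "JUDGMENT_ORG_ID")


def missing_judgment_vars(env=None):
    """Return the names of required variables that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


if __name__ == "__main__":
    missing = missing_judgment_vars()
    if missing:
        print("Missing environment variables: " + ", ".join(missing))
    else:
        print("Judgment credentials found.")
```

If you keep the values in a `.env` file, load it first (for example with `python-dotenv`) so the check sees them.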

### Monitor your Agents' Behavior in Production

Online behavioral monitoring lets you run scorers directly on your agents in production. The instant an agent misbehaves, engineers can be alerted to push a hotfix before customers are affected.

Our server-hosted scorers run in secure Firecracker microVMs with zero impact on your application latency.

**Create a Behavior Scorer**

First, create a hosted behavior scorer that runs securely in the cloud:

```py title="helpfulness_scorer.py"
from judgeval.data import Example
from judgeval.scorers.example_scorer import ExampleScorer


# Define custom example class with any fields you want to expose to the scorer
class QuestionAnswer(Example):
    question: str
    answer: str


# Define a server-hosted custom scorer
class HelpfulnessScorer(ExampleScorer):
    name: str = "Helpfulness Scorer"
    server_hosted: bool = True  # Enable server hosting

    async def a_score_example(self, example: QuestionAnswer):
        # Custom scoring logic for agent behavior
        # Can be an arbitrary combination of code and LLM calls
        if len(example.answer) > 10 and "?" not in example.answer:
            self.reason = "Answer is detailed and provides helpful information"
            return 1.0
        else:
            self.reason = "Answer is too brief or unclear"
            return 0.0
```
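
The heuristic inside `a_score_example` can be tried out locally before you upload anything. This is a plain-Python sketch of the same rule with no `judgeval` dependency; the function name `helpfulness_rule` is hypothetical and exists only for illustration.

```python
def helpfulness_rule(answer: str) -> tuple[float, str]:
    """Mirror the scorer's rule: longer than 10 characters and no question mark."""
    if len(answer) > 10 and "?" not in answer:
        return 1.0, "Answer is detailed and provides helpful information"
    return 0.0, "Answer is too brief or unclear"


for candidate in [
    "The capital of the U.S. is Washington, D.C.",
    "Yes",
    "Could you clarify the question?",
]:
    score, reason = helpfulness_rule(candidate)
    print(f"{score} | {reason} | {candidate}")
```

Checking a handful of representative answers this way makes it easier to spot rules that are too strict or too lenient before the scorer runs on production traffic.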

**Upload your Scorer**

Deploy your scorer to our secure infrastructure:

```bash

echo "pydantic" > requirements.txt

uv run judgeval upload_scorer helpfulness_scorer.py requirements.txt

```

```bash

echo "pydantic" > requirements.txt

judgeval upload_scorer helpfulness_scorer.py requirements.txt

```

```bash title="Terminal Output"

2025-09-27 17:54:06 - judgeval - INFO - Auto-detected scorer name: 'Helpfulness Scorer'

2025-09-27 17:54:08 - judgeval - INFO - Successfully uploaded custom scorer: Helpfulness Scorer

```

**Monitor Your Agent Using Custom Scorers**

Now instrument your agent with tracing and online evaluation:

**Note:** This example uses OpenAI. Make sure you have `OPENAI_API_KEY` set in your environment variables before running.

```py title="monitor.py"
from openai import OpenAI
from judgeval.tracer import Tracer, wrap
from helpfulness_scorer import HelpfulnessScorer, QuestionAnswer

judgment = Tracer(project_name="default_project")  # organizes traces
client = wrap(OpenAI())  # tracks all LLM calls


@judgment.observe(span_type="tool")
def format_task(question: str) -> str:
    return f"Please answer the following question: {question}"


@judgment.observe(span_type="tool")
def answer_question(prompt: str) -> str:
    response = client.chat.completions.create(
        model="gpt-4.1",
        messages=[{"role": "user", "content": prompt}]
    )
    return response.choices[0].message.content


@judgment.observe(span_type="function")
def run_agent(question: str) -> str:
    task = format_task(question)
    answer = answer_question(task)

    # Add online evaluation with server-hosted scorer
    judgment.async_evaluate(
        scorer=HelpfulnessScorer(),
        example=QuestionAnswer(question=question, answer=answer),
        sampling_rate=0.9  # Evaluate 90% of agent runs
    )
    return answer


if __name__ == "__main__":
    result = run_agent("What is the capital of the United States?")
    print(result)
```

Congratulations! You've just created your first trace with production monitoring.

**Key Benefits:**

* **`@judgment.observe()`** captures all agent interactions

* **`judgment.async_evaluate()`** runs hosted scorers with zero latency impact

* **`sampling_rate`** controls behavior scoring frequency (0.9 = 90% of agent runs)
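
To build intuition for what a `sampling_rate` of 0.9 means, here is a conceptual sketch of rate-based sampling. It is an illustration only and makes no claim about how `judgeval` implements sampling internally; `should_evaluate` is a hypothetical helper.

```python
import random


def should_evaluate(sampling_rate: float, rng: random.Random) -> bool:
    """Return True for roughly `sampling_rate` of calls."""
    # rng.random() is uniform on [0, 1), so the comparison succeeds
    # with probability equal to sampling_rate.
    return rng.random() < sampling_rate


rng = random.Random(0)  # seeded so the demonstration is repeatable
runs = 10_000
evaluated = sum(should_evaluate(0.9, rng) for _ in range(runs))
print(f"evaluated {evaluated} of {runs} runs")
```

Lowering the rate trades evaluation coverage for fewer scorer invocations, which matters if you are watching a monthly eval quota.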

You can set up [Agent Behavioral Monitoring (ABM)](/documentation/performance/online-evals) on your agents to [alert](/documentation/performance/alerts) your team when they misbehave in production.

View the [alerts docs](/documentation/performance/alerts) for more information.

### Regression test your Agents

Judgeval enables you to use agent-specific behavior rubrics as regression tests in your CI pipelines to stress-test agent behavior before your agent deploys into production.

You can run evals on predefined test examples with any of your own [custom scorers](/documentation/evaluation/scorers/custom-scorers).

Evals produce a score for each example. You can run multiple scorers on the same example to score different aspects of quality.

```py title="eval.py"
from judgeval import JudgmentClient
from judgeval.data import Example
from judgeval.scorers.example_scorer import ExampleScorer

client = JudgmentClient()


class CorrectnessExample(Example):
    question: str
    answer: str


class CorrectnessScorer(ExampleScorer):
    name: str = "Correctness Scorer"

    async def a_score_example(self, example: CorrectnessExample) -> float:
        # Replace this logic with your own scoring logic
        if "Washington, D.C." in example.answer:
            self.reason = "The answer is correct because it contains 'Washington, D.C.'."
            return 1.0
        self.reason = "The answer is incorrect because it does not contain 'Washington, D.C.'."
        return 0.0


example = CorrectnessExample(
    question="What is the capital of the United States?",  # Question to your agent (input to your agent!)
    answer="The capital of the U.S. is Washington, D.C.",  # Output from your agent (invoke your agent here!)
)

client.run_evaluation(
    examples=[example],
    scorers=[CorrectnessScorer()],
    project_name="default_project",
)
```

Your test should have passed! Let's break down what happened.

* `question` and `answer{:py}` represent the question from the user and answer from the agent.

* `CorrectnessScorer(){:py}` is a custom-defined scorer that statically checks if the output contains the correct answer. This scorer can be arbitrarily defined in code, including LLM-as-a-judge and any dependencies you'd like! See examples [here](/documentation/evaluation/scorers/custom-scorers#implementation-example).
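
As noted earlier in this section, several scorers can be applied to the same example to score different aspects of quality. The sketch below shows that idea with plain functions and a dataclass so it runs without `judgeval`; `QA`, `contains_capital`, `is_concise`, and `score_all` are all hypothetical names, and the 80-character limit is an arbitrary illustrative choice.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class QA:
    question: str
    answer: str


def contains_capital(example: QA) -> float:
    """Correctness: the answer must mention the expected city."""
    return 1.0 if "Washington, D.C." in example.answer else 0.0


def is_concise(example: QA) -> float:
    """Conciseness: the answer must be at most 80 characters long."""
    return 1.0 if len(example.answer) <= 80 else 0.0


def score_all(example: QA, scorers: Dict[str, Callable[[QA], float]]) -> Dict[str, float]:
    """Apply every scorer to the same example and collect one score per scorer."""
    return {name: scorer(example) for name, scorer in scorers.items()}


example = QA(
    question="What is the capital of the United States?",
    answer="The capital of the U.S. is Washington, D.C.",
)
scores = score_all(example, {"correctness": contains_capital, "conciseness": is_concise})
print(scores)
```

With `judgeval`, the equivalent is to pass more than one scorer in the `scorers` list of `client.run_evaluation()`.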

## Next Steps

Congratulations! You've just finished getting started with `judgeval` and the Judgment Platform.

Explore our features in more detail below:

Agentic Behavior Rubrics

Measure and optimize your agent along any behavioral rubric, using techniques such as LLM-as-a-judge and human-aligned rubrics.

Agent Behavioral Monitoring (ABM)

Take action when your agents misbehave in production: alert your team, add failure cases to datasets for later optimization, and more.

# Getting Started with Self-Hosting

URL: /documentation/self-hosting


Self-hosting Judgment Labs' platform is a great way to have full control over your LLM evaluation infrastructure. Instead of using our hosted platform, you can deploy your own instance of Judgment Labs' platform.

## Part 1: Infrastructure Skeleton Setup

Please have the following infrastructure set up:

1. A new/empty [AWS account](http://console.aws.amazon.com/) that you have admin access to: this will be used to host the self-hosted Judgment instance. Please write down the account ID.

2. A [Supabase](https://supabase.com/) organization that you have admin access to: this will be used to store and retrieve data for the self-hosted Judgment instance.

3. An available email address and the corresponding *app password* (see Tip below) for the email address (e.g. [no-reply@organization.com](mailto:no-reply@organization.com)). This email address will be used to send email invitations to users on the self-hosted instance.

**Tip:** Your app password is not your normal email password; learn about app passwords for [Gmail](https://support.google.com/mail/answer/185833?hl=en), [Outlook](https://support.microsoft.com/en-us/account-billing/how-to-get-and-use-app-passwords-5896ed9b-4263-e681-128a-a6f2979a7944), [Yahoo](https://help.yahoo.com/kb/SLN15241.html), [Zoho](https://help.zoho.com/portal/en/kb/bigin/channels/email/articles/generate-an-app-specific-password#What_is_TFA_Two_factor_Authentication), or [Fastmail](https://www.fastmail.help/hc/en-us/articles/360058752854-App-passwords).

Make sure to keep your AWS account ID and Supabase organization details secure and easily accessible, as you'll need them for the setup process.

## Part 2: Request Self-Hosting Access from Judgment Labs

Please contact us at [support@judgmentlabs.ai](mailto:support@judgmentlabs.ai) with the following information:

* The name of your organization

* An image of your organization's logo

* \[Optional] A subtitle for your organization

* Domain name for your self-hosted instance (e.g. api.organization.com) (can be any domain/subdomain name you own; this domain will be linked to your self-hosted instance as part of the setup process)

* The AWS account ID from Part 1

* Purpose of self-hosting

The domain name you provide must be one that you own and have control over, as you'll need to add DNS records during the setup process.

We will review your email request ASAP. Once approved, we will do the following:

1. Whitelist your AWS account ID to allow access to our Judgment ECR images.

2. Email you back with a backend Osiris API key that will be input as part of the setup process using the Judgment CLI (Part 3).

## Part 3: Install Judgment CLI

Make sure you have Python installed on your system before proceeding with the installation.

To install the Judgment CLI, follow these steps:

### Clone the repository

```bash

git clone https://github.com/JudgmentLabs/judgment-cli.git

```

### Navigate to the project directory

```bash

cd judgment-cli

```

### Set up a fresh Python virtual environment

Choose one of the following methods to set up your virtual environment:

```bash

python -m venv venv

source venv/bin/activate # On Windows, use: venv\Scripts\activate

```

```bash

pipenv shell

```

```bash

uv venv

source .venv/bin/activate # On Windows, use: .venv\Scripts\activate

```

### Install the package

```bash

pip install -e .

```

```bash

pipenv install -e .

```

```bash

uv pip install -e .

```

### Verifying the Installation

To verify that the CLI was installed correctly, run:

```bash

judgment --help

```

You should see a list of available commands and their descriptions.

### Available Commands

The Judgment CLI provides the following commands:

#### Self-Hosting Commands

| Command | Description |
| ----------------------------------- | ------------------------------------------------------------------------------------ |
| `judgment self-host main` | Deploy a self-hosted instance of Judgment (and optionally set up the HTTPS listener) |
| `judgment self-host https-listener` | Set up the HTTPS listener for a self-hosted Judgment instance |

## Part 4: Set Up Prerequisites

### AWS CLI Setup

You'll need to install and configure AWS CLI with the AWS account from Part 1.

```bash

brew install awscli

```

```text

Download and run the installer from https://awscli.amazonaws.com/AWSCLIV2.msi

```

```bash

sudo apt install awscli

```

After installation, configure your local environment with the relevant AWS credentials:

```bash

aws configure

```

### Terraform CLI Setup

Terraform CLI is required for deploying the AWS infrastructure.

```bash

brew tap hashicorp/tap

brew install hashicorp/tap/terraform

```

```bash

choco install terraform

```

```text

Follow instructions https://developer.hashicorp.com/terraform/tutorials/aws-get-started/install-cli

```

## Part 5: Deploy Your Self-Hosted Environment

During the setup process, `.tfstate` files will be generated by Terraform.

These files keep track of the state of the infrastructure deployed by Terraform.

**DO NOT DELETE THESE FILES.**

**Create a credentials file (e.g., `creds.json`) with the following format:**

```json title="creds.json"
{
  "supabase_token": "your_supabase_personal_access_token_here",
  "org_id": "your_supabase_organization_id_here",
  "db_password": "your_desired_supabase_database_password_here",
  "invitation_sender_email": "email_address_to_send_org_invitations_from",
  "invitation_sender_app_password": "app_password_for_invitation_sender_email",
  "osiris_api_key": "your_osiris_api_key_here (optional)",
  "openai_api_key": "your_openai_api_key_here (optional)",
  "togetherai_api_key": "your_togetherai_api_key_here (optional)",
  "anthropic_api_key": "your_anthropic_api_key_here (optional)"
}
```

**For `supabase_token`:** To retrieve your Supabase personal access token, you can either use an existing one or generate a new one [here](https://supabase.com/dashboard/account/tokens).

**For `org_id`:** You can retrieve it from the URL of your Supabase dashboard (make sure you have the correct organization selected in the top left corner, such as `Test Org` in the image below).

For example, if your organization URL is `https://supabase.com/dashboard/org/uwqswwrmmkxgrkfjkdex`, then your `org_id` is `uwqswwrmmkxgrkfjkdex`.
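
If you prefer to extract the ID programmatically, the following sketch pulls the path segment that follows `/dashboard/org/` out of a dashboard URL. It assumes the URL layout shown in the example above; `supabase_org_id` is a hypothetical helper, not part of any Supabase or Judgment tooling.

```python
from urllib.parse import urlparse


def supabase_org_id(dashboard_url: str) -> str:
    """Return the path segment that follows 'org' in the dashboard URL."""
    parts = [part for part in urlparse(dashboard_url).path.split("/") if part]
    try:
        return parts[parts.index("org") + 1]
    except (ValueError, IndexError):
        raise ValueError(f"no organization ID found in {dashboard_url!r}") from None


print(supabase_org_id("https://supabase.com/dashboard/org/uwqswwrmmkxgrkfjkdex"))
```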

**For `db_password`:** This can be any password of your choice. It is necessary for creating the Supabase project and can be used later to directly [connect to the project database](https://supabase.com/docs/guides/database/connecting-to-postgres).

**For `invitation_sender_email` and `invitation_sender_app_password`:** These are required because the only way to add users to the self-hosted Judgment instance is via email invitations.

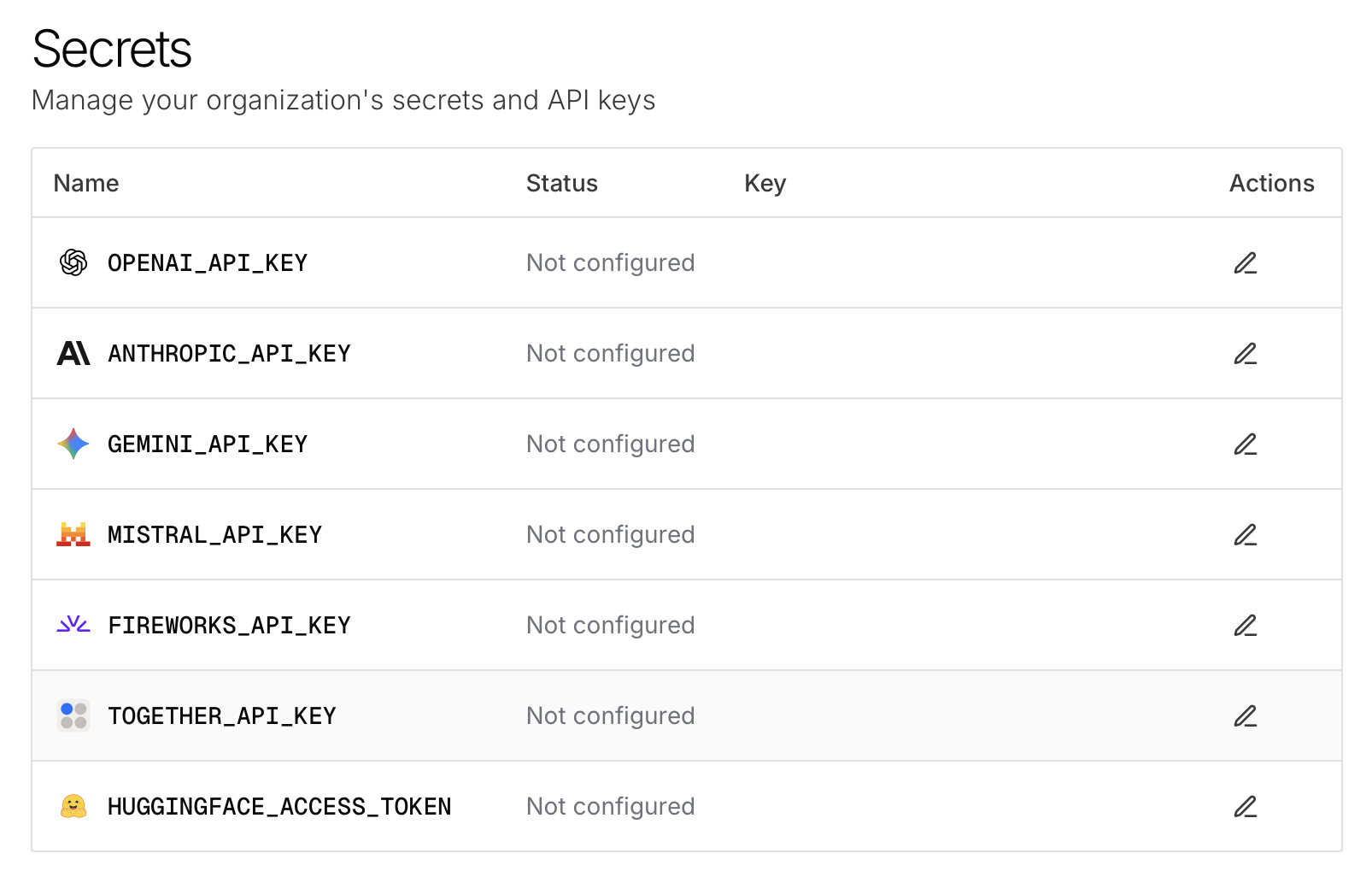

**For LLM API keys:** The four LLM API keys are optional. If you are not planning to run evaluations with the models that require any of these API keys, you do not need to specify them.
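
A quick pre-flight check of the credentials file can catch a missing field before the deployment starts. This is a minimal sketch under the assumption that the five keys not marked "(optional)" in the format above are the required ones; `missing_cred_keys` and `check_creds_file` are hypothetical helpers and are not part of the Judgment CLI.

```python
import json

# Assumption: these are the keys not marked "(optional)" in creds.json above.
REQUIRED_KEYS = (
    "supabase_token",
    "org_id",
    "db_password",
    "invitation_sender_email",
    "invitation_sender_app_password",
)


def missing_cred_keys(creds: dict) -> list:
    """Return required keys that are absent or empty in the credentials."""
    return [key for key in REQUIRED_KEYS if not creds.get(key)]


def check_creds_file(path: str = "creds.json") -> list:
    """Load the JSON file at `path` and report its missing required keys."""
    with open(path, encoding="utf-8") as handle:
        return missing_cred_keys(json.load(handle))


print(missing_cred_keys({"supabase_token": "token", "org_id": "org"}))
```

An empty list means every required field is present; the check does not verify that the values themselves are valid.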

**Run the main self-host command. The command syntax is:**

```bash

judgment self-host main [OPTIONS]

```

**Required options:**

* `--root-judgment-email` or `-e`: Email address for the root Judgment user

* `--root-judgment-password` or `-p`: Password for the root Judgment user

* `--domain-name` or `-d`: Domain name to request SSL certificate for (make sure you own this domain)

**Optional options:**

* `--creds-file` or `-c`: Path to credentials file (default: creds.json)

* `--supabase-compute-size` or `-s`: Size of the Supabase compute instance (default: small)

  * Available sizes: nano, micro, small, medium, large, xlarge, 2xlarge, 4xlarge, 8xlarge, 12xlarge, 16xlarge

* `--invitation-email-service` or `-i`: Email service for sending organization invitations (default: gmail)

  * Available services: gmail, outlook, yahoo, zoho, fastmail

For `--supabase-compute-size`, only "nano" is available on the free tier of Supabase. If you want to use a larger size, you will need to upgrade your organization to a paid plan.

**Example usage:**

```bash
judgment self-host main \
  --root-judgment-email root@example.com \
  --root-judgment-password password \
  --domain-name api.example.com \
  --creds-file creds.json \
  --supabase-compute-size nano \
  --invitation-email-service gmail
```
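
If you script your deployment, the same invocation can be assembled and validated in Python before it is run. This sketch uses only the flags, sizes, and services listed above; `self_host_command` is a hypothetical helper, and the sketch only builds and prints the command rather than executing it.

```python
import shlex

# Allowed values copied from the option list above.
COMPUTE_SIZES = {"nano", "micro", "small", "medium", "large", "xlarge",
                 "2xlarge", "4xlarge", "8xlarge", "12xlarge", "16xlarge"}
EMAIL_SERVICES = {"gmail", "outlook", "yahoo", "zoho", "fastmail"}


def self_host_command(email, password, domain, creds_file="creds.json",
                      compute_size="small", email_service="gmail"):
    """Build the argument list for `judgment self-host main`."""
    if compute_size not in COMPUTE_SIZES:
        raise ValueError(f"unknown compute size: {compute_size}")
    if email_service not in EMAIL_SERVICES:
        raise ValueError(f"unknown email service: {email_service}")
    return [
        "judgment", "self-host", "main",
        "--root-judgment-email", email,
        "--root-judgment-password", password,
        "--domain-name", domain,
        "--creds-file", creds_file,
        "--supabase-compute-size", compute_size,
        "--invitation-email-service", email_service,
    ]


argv = self_host_command("root@example.com", "password", "api.example.com",
                         compute_size="nano")
print(shlex.join(argv))
```

Passing the list to `subprocess.run(argv, check=True)` would execute it; keeping the arguments as a list avoids shell-quoting problems with passwords that contain special characters.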

**This command will:**

1. Create a new Supabase project

2. Create a root Judgment user in the self-hosted environment with the email and password provided

3. Deploy the Judgment AWS infrastructure using Terraform

4. Configure the AWS infrastructure to communicate with the new Supabase database

5. \* Request an SSL certificate from AWS Certificate Manager for the domain name provided

6. \*\* Optionally wait for the certificate to be issued and set up the HTTPS listener

\*For the certificate to be issued, this command will return two DNS records that must be manually added to your DNS registrar/service.

\*\*You will be prompted to either continue with the HTTPS listener setup now or to come back later. If you choose to proceed with the setup now, the program will wait for the certificate to be issued before continuing.

### Setting up the HTTPS listener

This step is optional; you can choose to have the HTTPS listener setup done as part of the main self-host command.

This command will only work after `judgment self-host main` has already been run AND the ACM certificate has been issued. To accomplish this:

1. Add the two DNS records returned by the main self-host command to your DNS registrar/service

2. Monitor the ACM console [here](https://console.aws.amazon.com/acm/home) until the certificate has status 'Issued'

To set up the HTTPS listener, run:

```bash

judgment self-host https-listener

```

This command will:

1. Set up the HTTPS listener with the certificate issued by AWS Certificate Manager

2. Return the URL of the HTTPS-enabled domain, which now points to your self-hosted Judgment server

## Part 6: Accessing Your Self-Hosted Environment

Your self-hosted Judgment API URL (referenced as `self_hosted_judgment_api_url` in this section) should be in the format `https://{self_hosted_judgment_domain}` (e.g. `https://api.organization.com`).

### From the Judgeval SDK

You can access your self-hosted instance by setting the following environment variables:

```bash
export JUDGMENT_API_URL="self_hosted_judgment_api_url"
export JUDGMENT_API_KEY="your_api_key"
export JUDGMENT_ORG_ID="your_org_id"
```

Afterwards, Judgeval can be used as you normally would.

### From the Judgment platform website

Visit the URL `https://app.judgmentlabs.ai/login?api_url={self_hosted_judgment_api_url}` to log in to your self-hosted instance. Your self-hosted Judgment API URL will be whitelisted when we review your request from Part 2.

You should be able to log in with the root user you configured during the setup process (`--root-judgment-email` and `--root-judgment-password` from the `self-host main` command).

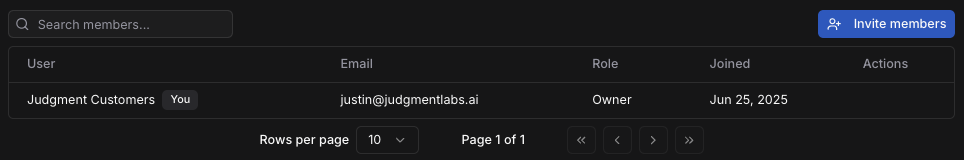

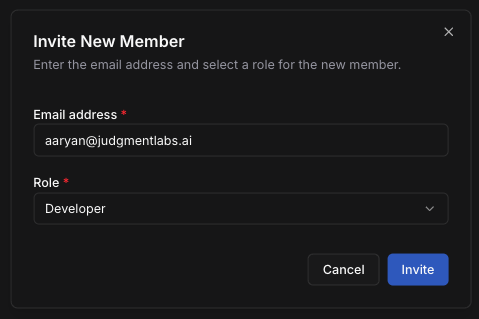

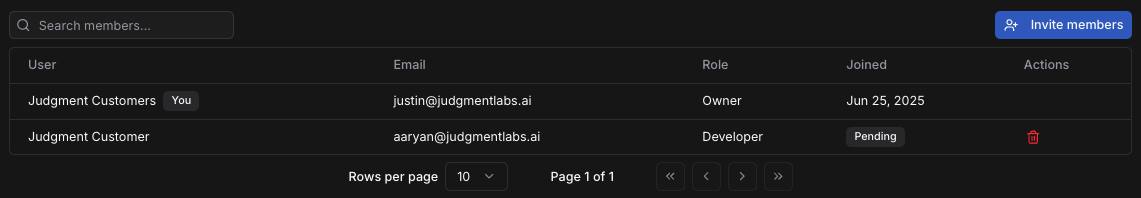

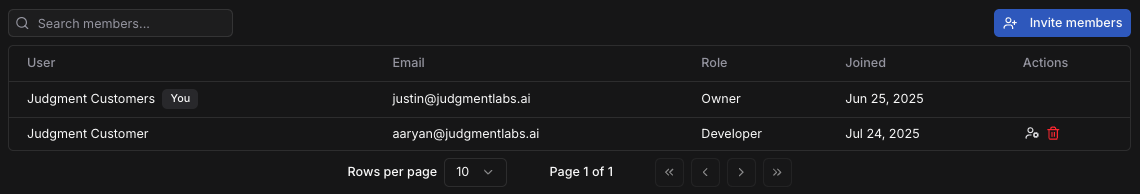
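
The login link follows directly from the pattern given above. As a small sketch, the hypothetical helper below substitutes your API URL into that pattern. It inserts the URL verbatim because the documented pattern shows no encoding; whether the platform also accepts a percent-encoded value is not stated here.

```python
def self_hosted_login_url(api_url: str) -> str:
    """Fill the documented login pattern with a self-hosted API URL."""
    if not api_url.startswith("https://"):
        raise ValueError("expected an https:// API URL")
    return f"https://app.judgmentlabs.ai/login?api_url={api_url}"


print(self_hosted_login_url("https://api.organization.com"))
```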

#### Adding more users to the self-hosted instance

For security reasons, users cannot register themselves on the self-hosted instance. Instead, you can add new users via email invitations to organizations.

To add a new user, make sure you're currently in the workspace/organization you want to add the new user to. Then, visit the [workspace member settings](https://app.judgmentlabs.ai/app/settings/members) and click the "Invite User" button. This process will send an email invitation to the new user to join the organization.

# Interactive Demo

URL: /interactive-demo

Try out our AI-powered research agent with Judgeval tracing


### Create an account

To view the detailed traces from your conversations, create a [Judgment Labs](https://app.judgmentlabs.ai/register) account.

### Start a conversation

This demo shows you both sides of AI agent interactions: the conversation **and** the detailed traces showing how judgeval traces your agent runs.

Chat with our AI research agent below. Ask it to research any topic, analyze data, or answer complex questions.

# Dataset

URL: /sdk-reference/dataset

Dataset class for managing datasets of Examples and Traces in Judgeval


## Overview

The `Dataset` class provides both methods for dataset operations and serves as the return type for dataset instances. When you call `Dataset.create()` or `Dataset.get()`, you receive a `Dataset` instance with additional methods for managing the dataset's contents.

## Quick Start Example

```python
from judgeval.dataset import Dataset
from judgeval.data import Example

dataset = Dataset.create(
    name="qa_dataset",
    project_name="default_project",
    examples=[Example(input="What is the powerhouse of the cell?", actual_output="The mitochondria.")]
)

dataset = Dataset.get(
    name="qa_dataset",
    project_name="default_project",
)

examples = []
example = Example(input="Sample question?", output="Sample answer.")
examples.append(example)
dataset.add_examples(examples=examples)
```

## Dataset Creation & Retrieval

### `Dataset.create(){:py}`

Create a new evaluation dataset for storage and reuse across multiple evaluation runs. Note this command pushes the dataset to the Judgment platform.

#### `name` \[!toc]

Name of the dataset

```py

"qa_dataset"

```

#### `project_name` \[!toc]

Name of the project

```py

"question_answering"

```

#### `examples` \[!toc]

List of examples to include in the dataset. See [Example](/sdk-reference/data-types/core-types#example) for details on the structure.

```py

[Example(input="...", actual_output="...")]

```

#### `traces` \[!toc]

List of traces to include in the dataset. See [Trace](/sdk-reference/data-types/core-types#trace) for details on the structure.

```py

[Trace(...)]

```

#### `overwrite` \[!toc]

Whether to overwrite an existing dataset with the same name.

#### Returns \[!toc]

A `Dataset` instance for further operations

### `JudgmentAPIError` \[!toc]

Raised when a dataset with the same name already exists in the project and `overwrite=False`. See [JudgmentAPIError](/sdk-reference/data-types/response-types#judgmentapierror) for details.

```py title="dataset.py"
from judgeval.dataset import Dataset
from judgeval.data import Example

dataset = Dataset.create(
    name="qa_dataset",
    project_name="default_project",
    examples=[Example(input="What is the powerhouse of the cell?", actual_output="The mitochondria.")]
)
```
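
Because `Dataset.create()` raises when the dataset already exists and `overwrite=False`, scripts that run repeatedly often want a "create, otherwise fetch" pattern. The sketch below shows that control flow with stand-in functions so it runs offline; `create_or_get`, `fake_create`, `fake_get`, and `FakeExistsError` are all hypothetical. With `judgeval` you would pass `Dataset.create`, `Dataset.get`, and `JudgmentAPIError` instead. Note that `JudgmentAPIError` may also be raised for other API failures, so treat this as a starting point rather than a complete error-handling strategy.

```python
def create_or_get(create, get, already_exists_error, **dataset_args):
    """Try create(); if it raises already_exists_error, fall back to get()."""
    try:
        return create(**dataset_args)
    except already_exists_error:
        return get(name=dataset_args["name"],
                   project_name=dataset_args["project_name"])


class FakeExistsError(Exception):
    """Stand-in for JudgmentAPIError in this offline demonstration."""


_store = {}  # in-memory stand-in for the Judgment platform


def fake_create(name, project_name, examples=None):
    key = (project_name, name)
    if key in _store:
        raise FakeExistsError(f"dataset {name!r} already exists")
    _store[key] = list(examples or [])
    return _store[key]


def fake_get(name, project_name):
    return _store[(project_name, name)]


first = create_or_get(fake_create, fake_get, FakeExistsError,
                      name="qa_dataset", project_name="default_project",
                      examples=["example 1"])
second = create_or_get(fake_create, fake_get, FakeExistsError,
                       name="qa_dataset", project_name="default_project",
                       examples=["example 2"])
print(first is second)
```

The second call does not overwrite the stored examples; it returns the dataset that was created by the first call.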

### `Dataset.get(){:py}`

Retrieve a dataset from the Judgment platform by its name and project name.

#### `name` \[!toc]

The name of the dataset to retrieve.

```py

"my_dataset"

```

#### `project_name` \[!toc]

The name of the project where the dataset is stored.

```py

"default_project"

```

#### Returns \[!toc]

A `Dataset` instance for further operations

```py title="retrieve_dataset.py"
from judgeval.dataset import Dataset

dataset = Dataset.get(
    name="qa_dataset",
    project_name="default_project",
)

print(dataset.examples)
```

## Dataset Management

Once you have a `Dataset` instance (from `Dataset.create()` or `Dataset.get()`), you can use these methods to manage its contents:

> **Note:** All instance methods automatically update the dataset and push changes to the Judgment platform.

### `dataset.add_examples(){:py}`

Add examples to the dataset.

#### `examples` \[!toc]

List of examples to add to the dataset.

#### Returns \[!toc]

`True` if examples were added successfully

```py title="add_examples.py"
from judgeval.dataset import Dataset
from judgeval.data import Example

dataset = Dataset.get(
    name="qa_dataset",
    project_name="default_project",
)

example = Example(input="Sample question?", output="Sample answer.")
examples = [example]
dataset.add_examples(examples=examples)
```

## Dataset Properties

When you have a `Dataset` instance, it provides access to the following properties:

### `Dataset{:py}`

### `dataset.name` \[!toc]

**Type:** `str` (read-only)

The name of the dataset.

### `dataset.project_name` \[!toc]

**Type:** `str` (read-only)

The project name where the dataset is stored.

### `dataset.examples` \[!toc]

**Type:** `List[Example]` (read-only)

List of [examples](/sdk-reference/data-types/core-types#example) contained in the dataset.

### `dataset.traces` \[!toc]

**Type:** `List[Trace]` (read-only)

List of [traces](/sdk-reference/data-types/core-types#trace) contained in the dataset (if any).

### `dataset.id` \[!toc]

**Type:** `str` (read-only)

Unique identifier for the dataset on the Judgment platform.

# JudgmentClient

URL: /sdk-reference/judgment-client

Run evaluations with the JudgmentClient class to test for regressions and run A/B tests on your agents.


## Overview

The JudgmentClient is your primary interface for interacting with the Judgment platform. It provides methods for running evaluations, managing datasets, handling traces, and more.

## Authentication

Set up your credentials using environment variables:

```bash

export JUDGMENT_API_KEY="your_key_here"

export JUDGMENT_ORG_ID="your_org_id_here"

```

```bash

# Add to your .env file

JUDGMENT_API_KEY="your_key_here"

JUDGMENT_ORG_ID="your_org_id_here"

```

### `JudgmentClient(){:py}`

Initialize a `JudgmentClient{:py}` object.

### `api_key` \[!toc]

Your Judgment API key. **Recommended:** Set using the `JUDGMENT_API_KEY` environment variable instead of passing directly.

### `organization_id` \[!toc]

Your organization ID. **Recommended:** Set using the `JUDGMENT_ORG_ID` environment variable instead of passing directly.

```py title="judgment_client.py"
from judgeval import JudgmentClient
import os
from dotenv import load_dotenv

load_dotenv()  # Load environment variables from .env file

# Automatically uses JUDGMENT_API_KEY and JUDGMENT_ORG_ID from environment
client = JudgmentClient()

# Manually pass in API key and Organization ID
client = JudgmentClient(
    api_key=os.getenv("JUDGMENT_API_KEY"),
    organization_id=os.getenv("JUDGMENT_ORG_ID")
)
```

***

### `client.run_evaluation(){:py}`

Execute an evaluation of examples using one or more scorers to measure performance and quality of your AI models.

### `examples` \[!toc]

List of [Example](/sdk-reference/data-types/core-types#example) objects (or any class inheriting from Example) containing inputs, outputs, and metadata to evaluate against your agents

```py

[Example(...)]

```

### `scorers` \[!toc]

List of scorers to use for evaluation, such as `PromptScorer`, `CustomScorer`, or any custom defined [ExampleScorer](/sdk-reference/data-types/core-types#examplescorer)

```py

[ExampleScorer(...)]

```

### `model` \[!toc]

Model used as judge when using LLM as a Judge

```py

"gpt-5"

```

### `project_name` \[!toc]

Name of the project for organization

```py

"my_qa_project"

```

### `eval_run_name` \[!toc]

Name for the evaluation run

```py

"experiment_v1"

```

### `assert_test` \[!toc]

Runs evaluations as unit tests, raising an exception if the score falls below the defined threshold.

```py
True
```

```py title="resolution.py"
from judgeval import JudgmentClient
from judgeval.data import Example
from judgeval.scorers.example_scorer import ExampleScorer

client = JudgmentClient()


class CustomerRequest(Example):
    request: str
    response: str


class ResolutionScorer(ExampleScorer):
    name: str = "Resolution Scorer"

    async def a_score_example(self, example: CustomerRequest):
        # Replace this logic with your own scoring logic
        if "package" in example.response:
            self.reason = "The response contains the word 'package'"
            return 1
        else:
            self.reason = "The response does not contain the word 'package'"
            return 0


example = CustomerRequest(request="Where is my package?", response="Your package will arrive tomorrow at 10:00 AM.")

res = client.run_evaluation(
    examples=[example],
    scorers=[ResolutionScorer()],
    project_name="default_project",
)

# Example with a failing test using assert_test=True
# This will raise an error because the response does not contain the word "package"
try:
    example = CustomerRequest(request="Where is my package?", response="Empty response.")
    client.run_evaluation(
        examples=[example],
        scorers=[ResolutionScorer()],
        project_name="default_project",
        assert_test=True,  # This will raise an error if any test fails
    )
except Exception as e:
    print(f"Test assertion failed: {e}")
```

Returns a list of `ScoringResult{:py}` objects. See [Return Types](#return-types) for detailed structure.

```py
[
    ScoringResult(
        success=False,
        scorers_data=[ScorerData(...)],
        name=None,
        data_object=Example(...),
        trace_id=None,
        run_duration=None,
        evaluation_cost=None
    )
]
```

## Return Types

### `ScoringResult`

The `ScoringResult{:py}` object contains the evaluation output of one or more scorers applied to a single example.

| Attribute | Type | Description |
| --- | --- | --- |
| success | bool | Whether all scorers applied to this example succeeded |
| scorers\_data | List\[ScorerData] | Individual scorer results and metadata |
| data\_object | Example | The original example object that was evaluated |
| run\_duration | Optional\[float] | Time taken to complete the evaluation |
| trace\_id | Optional\[str] | Associated trace ID for trace-based evaluations |
| evaluation\_cost | Optional\[float] | Cost of the evaluation in USD |

### `ScorerData`

Each `ScorerData{:py}` object within `scorers_data{:py}` contains the results from an individual scorer:

| Attribute | Type | Description |
| --- | --- | --- |
| name | str | Name of the scorer |
| threshold | float | Threshold used for pass/fail determination |
| success | bool | Whether this scorer passed its threshold |
| score | Optional\[float] | Numerical score from the scorer |
| reason | Optional\[str] | Explanation for the score/decision |
| evaluation\_model | Optional\[Union\[List\[str], str]] | Model(s) used for evaluation |
| error | Optional\[str] | Error message if scoring failed |

```py title="accessing_results.py"
# Example of accessing ScoringResult data
results = client.run_evaluation(examples, scorers)

for result in results:
    print(f"Overall success: {result.success}")
    print(f"Example input: {result.data_object.input}")

    for scorer_data in result.scorers_data:
        print(f"Scorer '{scorer_data.name}': {scorer_data.score} (threshold: {scorer_data.threshold})")
        if scorer_data.reason:
            print(f"Reason: {scorer_data.reason}")
```
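The `success`, `reason`, and `error` fields described in the tables above can be aggregated into a quick pass-rate report. The sketch below uses small stand-in dataclasses that mirror only the documented attribute names so it runs without the SDK; `summarize` is a hypothetical helper, and real `ScoringResult` objects carry more fields than shown here.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ScorerData:  # stand-in mirroring a subset of the documented attributes
    name: str
    threshold: float
    success: bool
    score: Optional[float] = None
    reason: Optional[str] = None
    error: Optional[str] = None


@dataclass
class ScoringResult:  # stand-in mirroring a subset of the documented attributes
    success: bool
    scorers_data: List[ScorerData] = field(default_factory=list)


def summarize(results):
    """Return (pass_rate, failures); failures lists (scorer name, explanation) pairs."""
    failures = [
        (sd.name, sd.error or sd.reason)
        for result in results
        for sd in result.scorers_data
        if not sd.success
    ]
    passed = sum(1 for result in results if result.success)
    pass_rate = passed / len(results) if results else 0.0
    return pass_rate, failures


results = [
    ScoringResult(True, [ScorerData("Resolution Scorer", 0.5, True, 1.0, "contains 'package'")]),
    ScoringResult(False, [ScorerData("Resolution Scorer", 0.5, False, 0.0, "missing 'package'")]),
]
pass_rate, failures = summarize(results)
print(f"Pass rate: {pass_rate:.0%}")
for name, why in failures:
    print(f"{name} failed: {why}")
```

Because the helper only reads attributes, the same function should work on the list returned by `client.run_evaluation()`, assuming those objects expose the attributes listed in the tables.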

## Error Handling

The JudgmentClient raises specific exceptions for different error conditions:

### `JudgmentAPIError`

Raised when API requests fail or server errors occur

### `ValueError`

Raised when invalid parameters or configuration are provided

### `FileNotFoundError`

Raised when test files or datasets are missing

```py title="error_handling.py"
from judgeval import JudgmentClient
from judgeval.data import Example
from judgeval.scorers.example_scorer import ExampleScorer
from judgeval.exceptions import JudgmentAPIError

client = JudgmentClient()


class CustomerRequest(Example):
    request: str
    response: str


example = CustomerRequest(request="Where is my package?", response="Your package will arrive tomorrow at 10:00 AM.")


class ResolutionScorer(ExampleScorer):
    name: str = "Resolution Scorer"

    async def a_score_example(self, example: CustomerRequest):
        # Replace this logic with your own scoring logic
        if "package" in example.response:
            self.reason = "The response contains the word 'package'"
            return 1
        else:
            self.reason = "The response does not contain the word 'package'"
            return 0


try:
    res = client.run_evaluation(
        examples=[example],
        scorers=[ResolutionScorer()],
        project_name="default_project",
    )
except JudgmentAPIError as e:
    print(f"API Error: {e}")
except ValueError as e:
    print(f"Invalid parameters: {e}")
except FileNotFoundError as e:
    print(f"File not found: {e}")
```

# PromptScorer

URL: /sdk-reference/prompt-scorer

Evaluate agent behavior based on a rubric you define and iterate on the platform.


## Overview

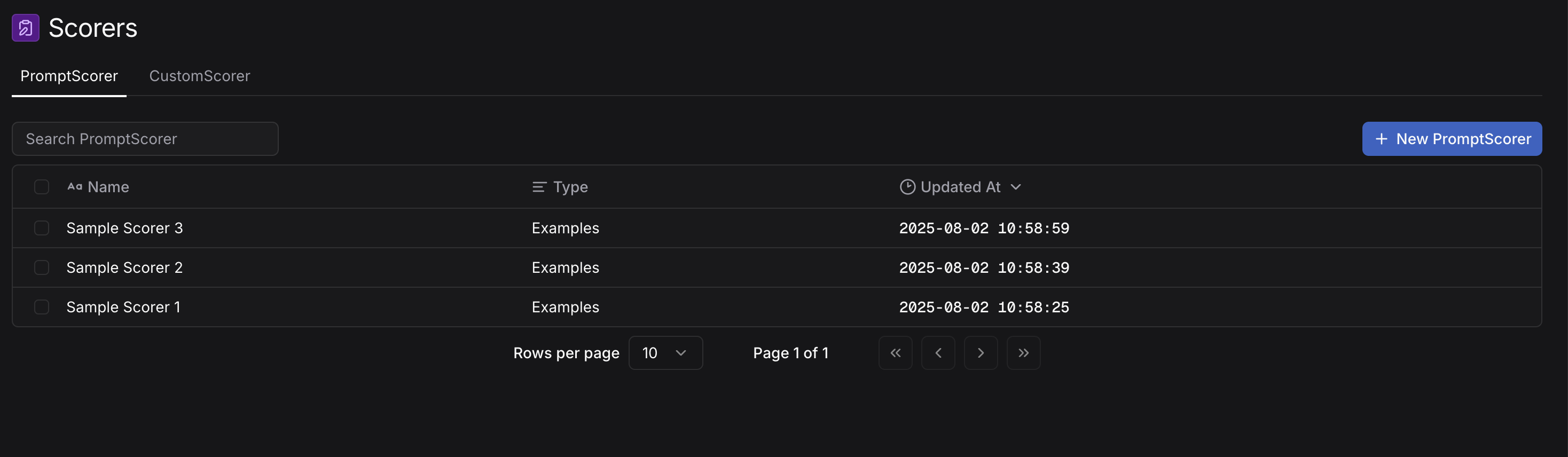

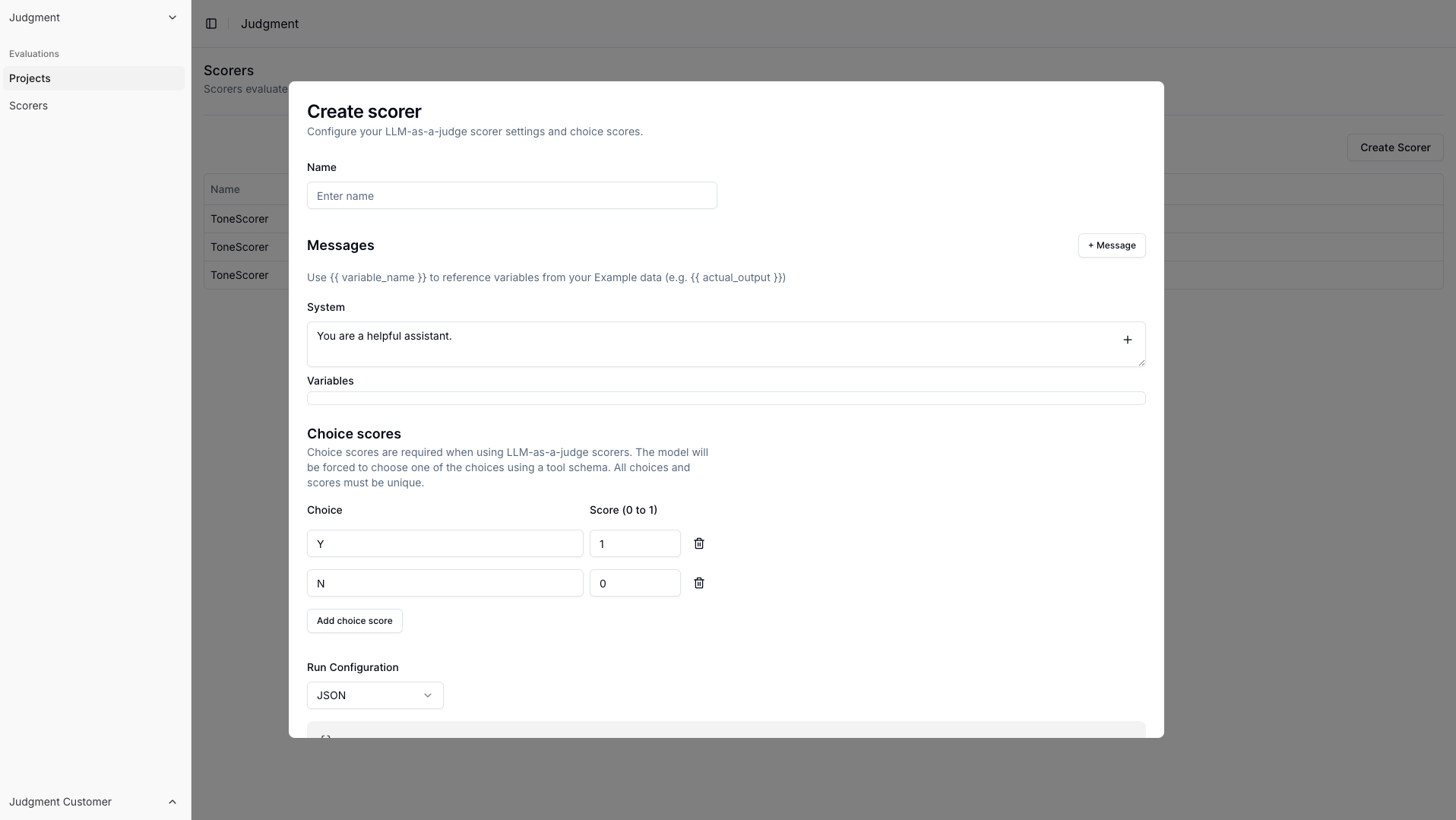

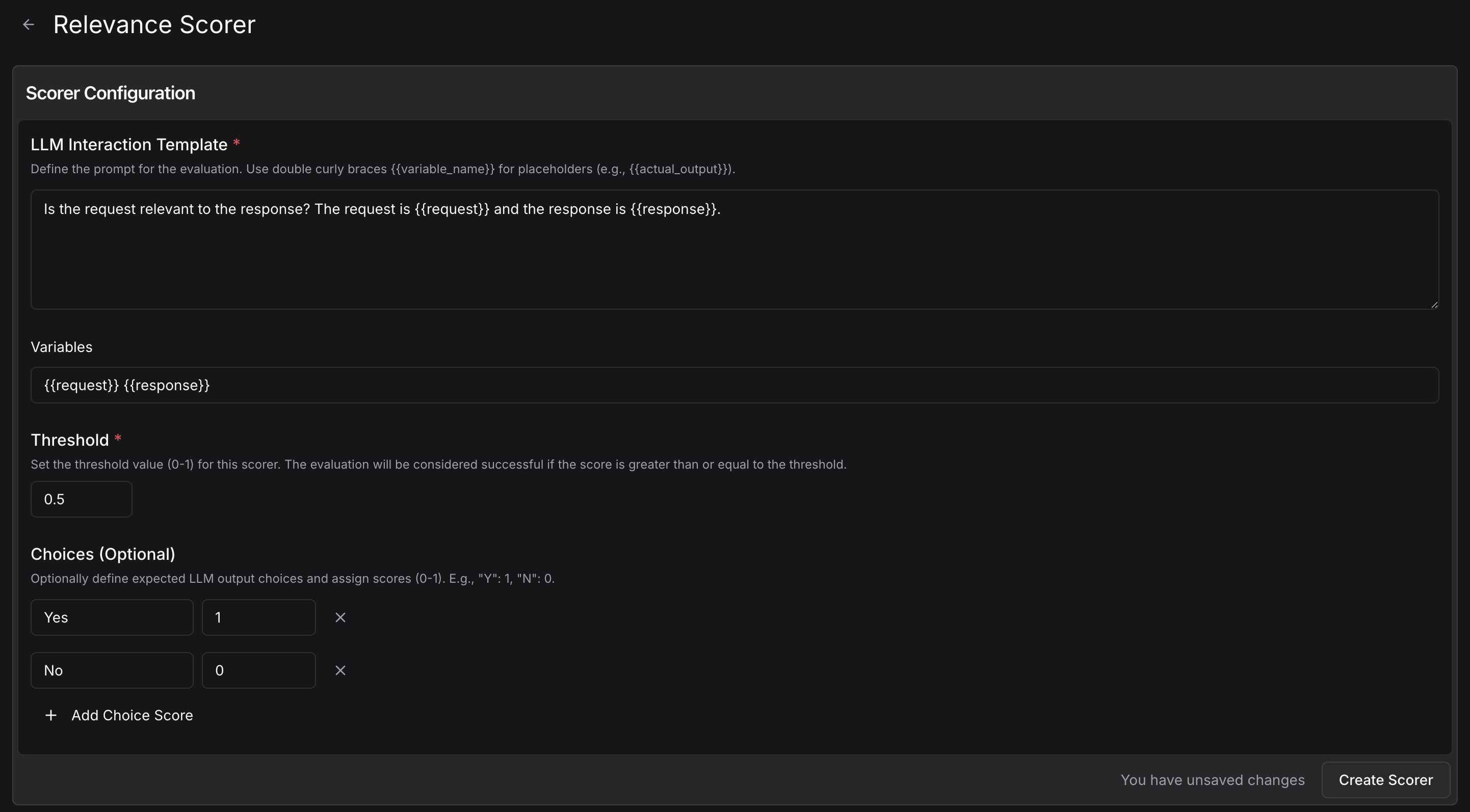

A `PromptScorer` is a powerful tool for evaluating your LLM system using use-case specific, natural language rubrics.

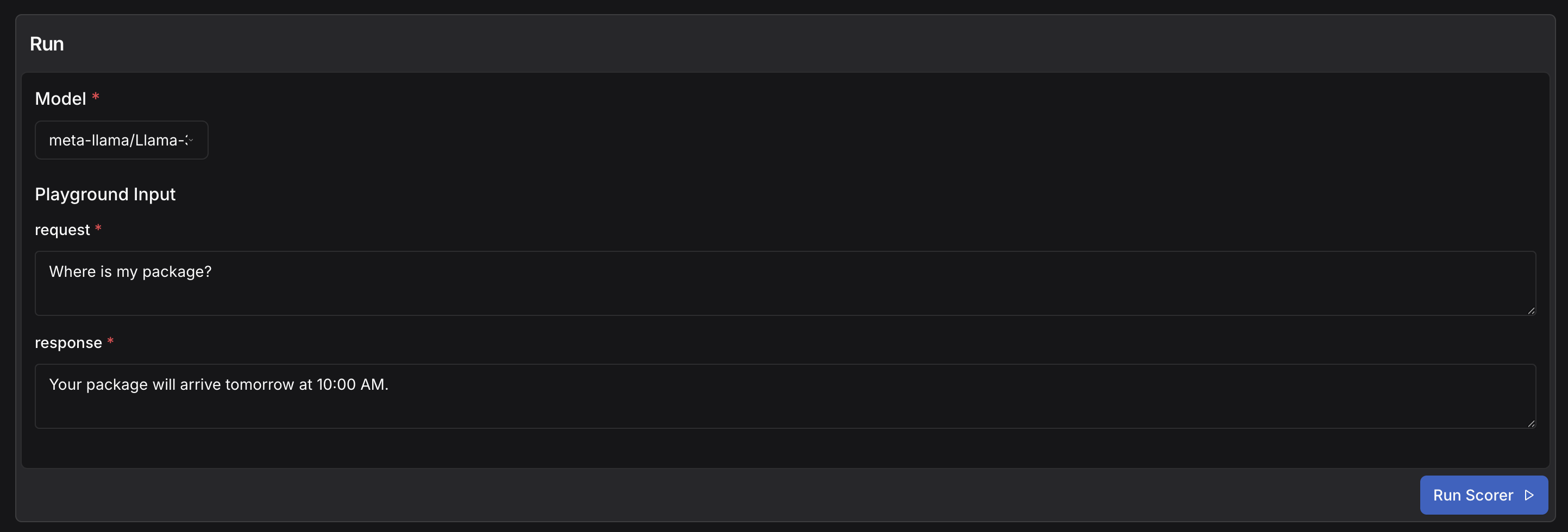

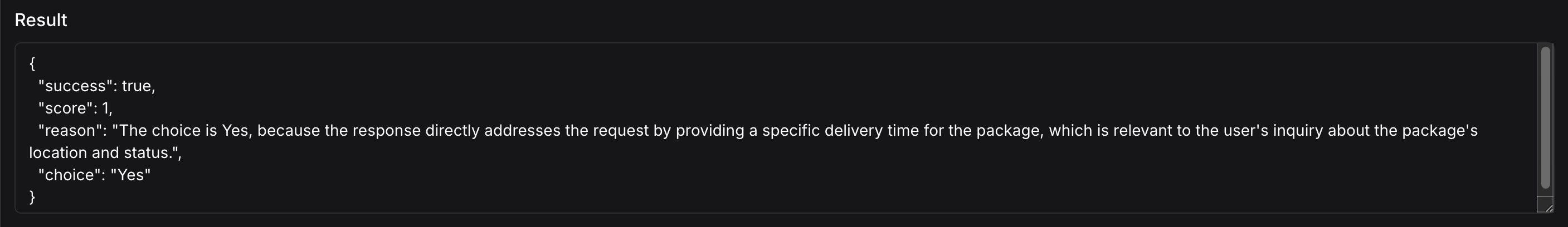

PromptScorers make it easy to prototype your evaluation rubrics: you can set up new criteria, test them on a few examples in the scorer playground, and then evaluate your agents' behavior in production with real customer usage.

All PromptScorer methods automatically sync changes with the Judgment platform.

## Quick Start Example

```py title="create_and_use_prompt_scorer.py"
from openai import OpenAI
from judgeval.scorers import PromptScorer
from judgeval.tracer import Tracer, wrap
from judgeval.data import Example

# Initialize tracer
judgment = Tracer(
    project_name="default_project"
)

# Auto-trace LLM calls
client = wrap(OpenAI())

# Initialize PromptScorer
scorer = PromptScorer.create(
    name="PositivityScorer",
    prompt="Is the response positive or negative? Question: {{input}}, response: {{actual_output}}",
    options={"positive": 1, "negative": 0}
)


class QAAgent:
    def __init__(self, client):
        self.client = client

    @judgment.observe(span_type="tool")
    def process_query(self, query):
        response = self.client.chat.completions.create(
            model="gpt-5",
            messages=[
                {"role": "system", "content": "You are a helpful assistant"},
                {"role": "user", "content": f"I have a query: {query}"},
            ]
        )  # Automatically traced
        return f"Response: {response.choices[0].message.content}"

    # Basic function tracing
    @judgment.agent()
    @judgment.observe(span_type="agent")
    def invoke_agent(self, query):
        result = self.process_query(query)
        judgment.async_evaluate(
            scorer=scorer,
            example=Example(input=query, actual_output=result),
            model="gpt-5"
        )
        return result


if __name__ == "__main__":
    agent = QAAgent(client)
    print(agent.invoke_agent("What is the capital of the United States?"))
```

## Authentication

Set up your credentials using environment variables:

```bash

export JUDGMENT_API_KEY="your_key_here"

export JUDGMENT_ORG_ID="your_org_id_here"

```

```bash

# Add to your .env file

JUDGMENT_API_KEY="your_key_here"

JUDGMENT_ORG_ID="your_org_id_here"

```

## **PromptScorer Creation & Retrieval**

## `PromptScorer.create()`/`TracePromptScorer.create(){:py}`

Initialize a `PromptScorer{:py}` or `TracePromptScorer{:py}` object.

### `name` \[!toc]

The name of the PromptScorer

### `prompt`\[!toc]

The prompt used by the LLM judge to make an evaluation

### `options`\[!toc]

If specified, the LLM judge will pick from one of the choices, and the score will be the one corresponding to the choice

### `judgment_api_key`\[!toc]

Recommended - set using the `JUDGMENT_API_KEY` environment variable

### `organization_id`\[!toc]

Recommended - set using the `JUDGMENT_ORG_ID` environment variable

#### Returns\[!toc]

A `PromptScorer` instance

```py title="create_prompt_scorer.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.create(
    name="Test Scorer",
    prompt="Is the response positive or negative? Response: {{actual_output}}",
    options={"positive": 1, "negative": 0}
)
```
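To make the relationship between `prompt`, `options`, and the resulting score concrete, here is a minimal local sketch. It assumes that `{{name}}` placeholders are filled from the example's fields and that a score passes when it is greater than or equal to the threshold; both are assumptions made for illustration, and `render_prompt` and `score_choice` are hypothetical helpers rather than judgeval functions.

```python
import re


def render_prompt(template, fields):
    """Fill {{name}} placeholders from a dict; unknown names are left untouched."""
    def substitute(match):
        key = match.group(1)
        return str(fields[key]) if key in fields else match.group(0)
    return re.sub(r"\{\{\s*(\w+)\s*\}\}", substitute, template)


def score_choice(options, choice, threshold=0.5):
    """Map the judge's chosen option to its score and an assumed pass/fail flag."""
    if choice not in options:
        raise ValueError(f"{choice!r} is not one of {sorted(options)}")
    score = options[choice]
    return score, score >= threshold


prompt = render_prompt(
    "Is the response positive or negative? Response: {{actual_output}}",
    {"actual_output": "Thanks, that fixed it!"},
)
options = {"positive": 1, "negative": 0}
print(prompt)
print(score_choice(options, "positive"))  # (1, True)
```

In practice the LLM judge selects the choice; the sketch only shows how a selected choice would translate into a numeric score.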

## `PromptScorer.get()`/`TracePromptScorer.get(){:py}`

Retrieve a `PromptScorer{:py}` or `TracePromptScorer{:py}` object that has already been created for the organization.

### `name`\[!toc]

The name of the PromptScorer you would like to retrieve

### `judgment_api_key`\[!toc]

Recommended - set using the `JUDGMENT_API_KEY` environment variable

### `organization_id`\[!toc]

Recommended - set using the `JUDGMENT_ORG_ID` environment variable

#### Returns\[!toc]

A `PromptScorer` instance

```py title="get_prompt_scorer.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)
```

## **PromptScorer Management**

### `scorer.append_to_prompt(){:py}`

Add to the prompt for your PromptScorer

### `prompt_addition`\[!toc]

This string will be added to the existing prompt for the scorer.

#### Returns\[!toc]

None

```py title="append_to_prompt.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

scorer.append_to_prompt("Consider the overall tone, word choice, and emotional sentiment when making your determination.")
```

### `scorer.set_threshold(){:py}`

Update the threshold for your PromptScorer

### `threshold`\[!toc]

The new threshold you would like the PromptScorer to use

#### Returns\[!toc]

None

```py title="set_threshold.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

scorer.set_threshold(0.5)
```

### `scorer.set_prompt(){:py}`

Update the prompt for your PromptScorer

### `prompt`\[!toc]

The new prompt you would like the PromptScorer to use

#### Returns\[!toc]

None

```py title="set_prompt.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

scorer.set_prompt("Is the response helpful to the question? Question: {{input}}, response: {{actual_output}}")
```

### `scorer.set_options(){:py}`

Update the options for your PromptScorer

### `options`\[!toc]

The new options you would like the PromptScorer to use

#### Returns\[!toc]

None

```py title="set_options.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

scorer.set_options({"Yes": 1, "No": 0})
```

### `scorer.get_threshold(){:py}`

Retrieve the threshold for your PromptScorer

This method takes no parameters.

#### Returns\[!toc]

The threshold value for the PromptScorer

```py title="get_threshold.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

threshold = scorer.get_threshold()
```

### `scorer.get_prompt(){:py}`

Retrieve the prompt for your PromptScorer

This method takes no parameters.

#### Returns\[!toc]

The prompt string for the PromptScorer

```py title="get_prompt.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

prompt = scorer.get_prompt()
```

### `scorer.get_options(){:py}`

Retrieve the options for your PromptScorer

This method takes no parameters.

#### Returns\[!toc]

The options dictionary for the PromptScorer

```py title="get_options.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

options = scorer.get_options()
```

### `scorer.get_name(){:py}`

Retrieve the name for your PromptScorer

This method takes no parameters.

#### Returns\[!toc]

The name of the PromptScorer

```py title="get_name.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

name = scorer.get_name()
```

### `scorer.get_config(){:py}`

Retrieve the name, prompt, options, and threshold for your PromptScorer in a dictionary format

This method takes no parameters.

#### Returns\[!toc]

Dictionary containing the name, prompt, options, and threshold for the PromptScorer

```py title="get_config.py"
from judgeval.scorers import PromptScorer

scorer = PromptScorer.get(
    name="Test Scorer"
)

config = scorer.get_config()
```

# Tracer

URL: /sdk-reference/tracing

Track agent behavior and evaluate performance in real-time with the Tracer class.


## Overview

The `Tracer` class provides comprehensive observability for AI agents and LLM applications. It automatically captures execution traces, spans, and performance metrics while enabling real-time evaluation and monitoring through the Judgment platform.

The `Tracer` is implemented as a **singleton** - only one instance exists per application. Multiple `Tracer()` initializations will return the same instance. All tracing is built on **OpenTelemetry** standards, ensuring compatibility with the broader observability ecosystem.
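For readers unfamiliar with the singleton pattern, the toy class below shows one common way to get "same instance on every construction" behavior in Python. It is a generic illustration under the assumption that the first configuration wins; it is not judgeval's actual implementation, and `SingletonTracer` is a hypothetical name.

```python
class SingletonTracer:
    """Toy class showing the singleton pattern; not judgeval's Tracer."""

    _instance = None

    def __new__(cls, *args, **kwargs):
        # Create the instance once, then hand back the same object every time.
        if cls._instance is None:
            cls._instance = super().__new__(cls)
        return cls._instance

    def __init__(self, project_name="default_project"):
        # __init__ runs on every call, so only configure the first time.
        if not hasattr(self, "project_name"):
            self.project_name = project_name


first = SingletonTracer(project_name="default_project")
second = SingletonTracer(project_name="another_project")
print(first is second)       # True
print(second.project_name)   # default_project
```

The practical consequence is that a tracer created in one module can be imported and reused everywhere else without passing it around explicitly.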

## Quick Start Example

```python
from judgeval.tracer import Tracer, wrap
from openai import OpenAI

# Initialize tracer
judgment = Tracer(
    project_name="default_project"
)

# Auto-trace LLM calls
client = wrap(OpenAI())


class QAAgent:
    def __init__(self, client):
        self.client = client

    @judgment.observe(span_type="tool")
    def process_query(self, query):
        response = self.client.chat.completions.create(
            model="gpt-5",
            messages=[
                {"role": "system", "content": "You are a helpful assistant"},
                {"role": "user", "content": f"I have a query: {query}"},
            ]
        )  # Automatically traced
        return f"Response: {response.choices[0].message.content}"

    # Basic function tracing
    @judgment.agent()
    @judgment.observe(span_type="agent")
    def invoke_agent(self, query):
        result = self.process_query(query)
        return result


if __name__ == "__main__":
    agent = QAAgent(client)
    print(agent.invoke_agent("What is the capital of the United States?"))
```

## How Tracing Works

The Tracer automatically captures comprehensive execution data from your AI agents:

**Key Components:**

* **`@judgment.observe()`** captures all tool interactions, inputs, outputs, and execution time

* **`wrap(OpenAI())`** automatically tracks all LLM API calls including token usage and costs

* **`@judgment.agent()`** identifies which agent is responsible for each tool call in multi-agent systems

**What Gets Captured:**

* Tool usage and results

* LLM API calls (model, messages, tokens, costs)

* Function inputs and outputs

* Execution duration and hierarchy

* Error states and debugging information

**Automatic Monitoring:**

* All traced data flows to the Judgment platform in real-time

* Zero-latency impact on your application performance

* Comprehensive observability across your entire agent workflow
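To give a feel for what a tracing decorator records, the following toy decorator captures a function's inputs, output, error state, and duration into an in-memory list. It is a simplified sketch and not how `@judgment.observe()` is implemented; `toy_observe` and `captured_spans` are hypothetical names, and real spans are exported to the Judgment platform rather than kept in a list.

```python
import functools
import time

captured_spans = []  # stand-in for an exporter


def toy_observe(span_type="span"):
    """Toy decorator recording the kind of data a tracing decorator captures."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {
                "function": func.__name__,
                "span_type": span_type,
                "inputs": {"args": args, "kwargs": kwargs},
            }
            start = time.perf_counter()
            try:
                record["output"] = func(*args, **kwargs)
                return record["output"]
            except Exception as exc:
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on success and failure, so every call yields one record.
                record["duration"] = time.perf_counter() - start
                captured_spans.append(record)
        return wrapper
    return decorator


@toy_observe(span_type="tool")
def search_web(query):
    return f"Results for: {query}"


search_web("judgment tracing")
print(captured_spans[0]["function"], captured_spans[0]["span_type"])
print(captured_spans[0]["output"])
```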

## Tracer Initialization

The Tracer is your primary interface for adding observability to your AI agents. It provides methods for tracing function execution, evaluating performance, and collecting comprehensive environment interaction data.

### `Tracer(){:py}`

Initialize a `Tracer{:py}` object.

#### `api_key` \[!toc]

Recommended - set using the `JUDGMENT_API_KEY` environment variable

#### `organization_id` \[!toc]

Recommended - set using the `JUDGMENT_ORG_ID` environment variable

#### `project_name` \[!toc]

Project name override

#### `enable_monitoring` \[!toc]

If you need to toggle monitoring on and off

#### `enable_evaluations` \[!toc]

If you need to toggle evaluations on and off for `async_evaluate(){:py}`

#### `resource_attributes` \[!toc]

OpenTelemetry resource attributes to attach to all spans. Resource attributes describe the entity producing the telemetry data (e.g., service name, version, environment). See the [OpenTelemetry Resource specification](https://opentelemetry.io/docs/specs/otel/configuration/sdk-environment-variables/) for standard attributes.

```py title="tracer.py"
from judgeval.tracer import Tracer

judgment = Tracer(
    project_name="default_project"
)


@judgment.observe(span_type="function")
def answer_question(question: str) -> str:
    answer = "The capital of the United States is Washington, D.C."
    return answer


@judgment.observe(span_type="tool")
def process_request(question: str) -> str:
    answer = answer_question(question)
    return answer


if __name__ == "__main__":
    print(process_request("What is the capital of the United States?"))
```

```py title="tracer_otel.py"
from judgeval.tracer import Tracer
from opentelemetry.sdk.trace import TracerProvider

tracer_provider = TracerProvider()

# Initialize tracer with OpenTelemetry configuration
judgment = Tracer(
    project_name="default_project",
    resource_attributes={
        "service.name": "my-ai-agent",
        "service.version": "1.2.0",
        "deployment.environment": "production"
    }
)

tracer_provider.add_span_processor(judgment.get_processor())
tracer = tracer_provider.get_tracer(__name__)


def answer_question(question: str) -> str:
    with tracer.start_as_current_span("answer_question_span") as span:
        span.set_attribute("question", question)
        answer = "The capital of the United States is Washington, D.C."
        span.set_attribute("answer", answer)
        return answer


def process_request(question: str) -> str:
    with tracer.start_as_current_span("process_request_span") as span:
        span.set_attribute("input", question)
        answer = answer_question(question)
        span.set_attribute("output", answer)
        return answer


if __name__ == "__main__":
    print(process_request("What is the capital of the United States?"))
```

***

## Agent Tracking and Online Evals

### `@tracer.observe(){:py}`

Records an observation or output during a trace. This is useful for capturing intermediate steps, tool results, or decisions made by the agent. Optionally, provide a scorer config to run an evaluation on the trace.

#### `func` \[!toc]

The function to decorate (automatically provided when used as decorator)

#### `name` \[!toc]

Optional custom name for the span (defaults to function name)

```py

"custom_span_name"

```

#### `span_type` \[!toc]

Type of span to create. Available options:

* `"span"`: General span (default)

* `"tool"`: For functions that should be tracked and exported as agent tools

* `"function"`: For main functions or entry points

* `"llm"`: For language model calls (automatically applied to wrapped clients)

LLM clients wrapped using `wrap(){:py}` automatically use the `"llm"` span type without needing manual decoration.

```py

"tool" # or "function", "llm", "span"

```

#### `scorer_config`

Configuration for running an evaluation on the trace or sub-trace. When `scorer_config` is provided, a trace evaluation will be run for the sub-trace/span tree with the decorated function as the root. See [`TraceScorerConfig`](#tracescorerconfigpy) for more details

```py
# retrieve/create a trace scorer to be used with the TraceScorerConfig
trace_scorer = TracePromptScorer.get(name="sample_trace_scorer")

TraceScorerConfig(
    scorer=trace_scorer,
    sampling_rate=0.5,
)
```

```py title="trace.py"
from openai import OpenAI
from judgeval.tracer import Tracer

client = OpenAI()
tracer = Tracer(project_name='default_project', deep_tracing=False)


@tracer.observe(span_type="tool")
def search_web(query):
    return f"Results for: {query}"


@tracer.observe(span_type="retriever")
def get_database(query):
    return f"Database results for: {query}"


@tracer.observe(span_type="function")
def run_agent(user_query):
    # Use tools based on query
    if "database" in user_query:
        info = get_database(user_query)
    else:
        info = search_web(user_query)

    prompt = f"Context: {info}, Question: {user_query}"

    # Generate response
    response = client.chat.completions.create(
        model="gpt-5",
        messages=[{"role": "user", "content": prompt}]
    )

    return response.choices[0].message.content
```

***

### `wrap(){:py}`

Wraps an API client to add tracing capabilities. Supports OpenAI, Together, Anthropic, and Google GenAI clients. Patches methods like `.create{:py}`, Anthropic's `.stream{:py}`, and OpenAI's `.responses.create{:py}` and `.beta.chat.completions.parse{:py}` methods using a wrapper class.

#### `client` \[!toc]

API client to wrap (OpenAI, Anthropic, Together, Google GenAI, Groq)

```py

OpenAI()

```

```py title="wrapped_api_client.py"
from openai import OpenAI
from judgeval.tracer import wrap

client = OpenAI()
wrapped_client = wrap(client)

# All API calls are now automatically traced
response = wrapped_client.chat.completions.create(
    model="gpt-5",
    messages=[{"role": "user", "content": "Hello"}]
)

# Streaming calls are also traced
stream = wrapped_client.chat.completions.create(
    model="gpt-5",
    messages=[{"role": "user", "content": "Hello"}],
    stream=True
)
```
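The idea of patching a client's `.create` method through a wrapper class can be illustrated without any SDK installed. The sketch below uses a fake client and a recording wrapper; every class and function name in it (`FakeClient`, `RecordingCompletions`, `toy_wrap`) is hypothetical, and the real `wrap()` records far more detail, such as token usage and cost.

```python
class FakeCompletions:
    """Stand-in for an SDK resource with a create() method."""

    def create(self, model, messages):
        return {"model": model, "reply": f"echo: {messages[-1]['content']}"}


class FakeClient:
    def __init__(self):
        self.completions = FakeCompletions()


class RecordingCompletions:
    """Wrapper class that delegates to the original and logs each call."""

    def __init__(self, inner, log):
        self._inner = inner
        self._log = log

    def create(self, **kwargs):
        response = self._inner.create(**kwargs)
        self._log.append({"model": kwargs.get("model"), "response": response})
        return response


def toy_wrap(client, log):
    """Replace the client's completions resource with the recording wrapper."""
    client.completions = RecordingCompletions(client.completions, log)
    return client


calls = []
client = toy_wrap(FakeClient(), calls)
client.completions.create(model="gpt-5", messages=[{"role": "user", "content": "Hello"}])
print(len(calls), calls[0]["response"]["reply"])  # 1 echo: Hello
```

Because the wrapper returns the original response unchanged, calling code does not need to know whether the client is wrapped.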

***

### `tracer.async_evaluate(){:py}`

Runs quality evaluations on the current trace/span using specified scorers. You can provide either an Example object or individual evaluation parameters (input, actual\_output, etc.).

#### `scorer` \[!toc]

An evaluation scorer to run. See [Configuration Types](/sdk-reference/data-types/config-types) for available scorer options.

```py

FaithfulnessScorer()

```

#### `example` \[!toc]

Example object containing evaluation data. See [Example](/sdk-reference/data-types/core-types#example) for structure details.

#### `model` \[!toc]

Model name for evaluation

```py

"gpt-5"

```

#### `sampling_rate` \[!toc]

A float between 0 and 1 representing the chance the eval should be sampled

```py

0.75 # Eval occurs 75% of the time

```
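A sampling rate of this kind is typically implemented as a single random draw per call. The sketch below shows that idea with the standard library; `should_sample` is a hypothetical helper, and whether judgeval samples in exactly this way is an assumption.

```python
import random


def should_sample(sampling_rate, rng=random):
    """Return True with probability sampling_rate (0 never runs, 1 always runs)."""
    if not 0.0 <= sampling_rate <= 1.0:
        raise ValueError("sampling_rate must be between 0 and 1")
    # random() returns a float in [0, 1), so 1.0 always passes and 0.0 never does.
    return rng.random() < sampling_rate


rng = random.Random(0)  # seeded so the estimate is reproducible
runs = sum(should_sample(0.75, rng) for _ in range(10_000))
print(f"Evaluated {runs / 10_000:.1%} of calls")
```

Lower rates reduce evaluation cost on high-traffic paths at the price of scoring fewer calls.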

```py title="async_evaluate.py"
from judgeval.scorers import AnswerRelevancyScorer
from judgeval.data import Example
from judgeval.tracer import Tracer

judgment = Tracer(project_name="default_project")


@judgment.observe(span_type="function")
def agent(question: str) -> str:
    answer = "Paris is the capital of France"

    # Create example object
    example = Example(
        input=question,
        actual_output=answer,
    )

    # Evaluate using Example
    judgment.async_evaluate(
        scorer=AnswerRelevancyScorer(threshold=0.5),
        example=example,
        model="gpt-5",
        sampling_rate=0.9
    )

    return answer


if __name__ == "__main__":
    print(agent("What is the capital of France?"))
```

***

## Multi-Agent Monitoring

### `@tracer.agent(){:py}`

Method decorator for agentic systems that assigns an identifier to each agent and enables tracking of their internal state variables. Essential for monitoring and debugging single or multi-agent systems where you need to track each agent's behavior and state separately. This decorator should be used on the entry point method of your agent class.

#### `identifier` \[!toc]

The identifier to associate with the class whose method is decorated. This will be used as the instance name in traces.

```py

"id"

```

```py title="agent.py"
from judgeval.tracer import Tracer

judgment = Tracer(project_name="default_project")


class TravelAgent:
    def __init__(self, id):
        self.id = id

    @judgment.observe(span_type="tool")
    def book_flight(self, destination):
        return f"Flight booked to {destination}!"

    @judgment.agent(identifier="id")
    @judgment.observe(span_type="function")
    def invoke_agent(self, destination):
        flight_info = self.book_flight(destination)
        return f"Here is your requested flight info: {flight_info}"


if __name__ == "__main__":
    agent = TravelAgent("travel_agent_1")
    print(agent.invoke_agent("Paris"))

    agent2 = TravelAgent("travel_agent_2")
    print(agent2.invoke_agent("New York"))
```
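The `identifier` argument names an attribute on the instance, not the instance name itself. The toy decorator below sketches how such a lookup might work using `getattr`; it is an assumption about the mechanism for illustration only, `toy_agent` is a hypothetical name, and unlike the real decorator it returns the label alongside the result so the effect is visible.

```python
import functools


def toy_agent(identifier="id"):
    """Toy decorator: label each call with the instance attribute named by identifier."""
    def decorator(method):
        @functools.wraps(method)
        def wrapper(self, *args, **kwargs):
            # Look up the attribute (e.g. self.id) to get the per-instance name.
            instance_name = getattr(self, identifier, None)
            label = f"{type(self).__name__}({instance_name})"
            return label, method(self, *args, **kwargs)
        return wrapper
    return decorator


class TravelAgent:
    def __init__(self, id):
        self.id = id

    @toy_agent(identifier="id")
    def invoke_agent(self, destination):
        return f"Flight booked to {destination}!"


print(TravelAgent("travel_agent_1").invoke_agent("Paris"))
print(TravelAgent("travel_agent_2").invoke_agent("New York"))
```

Two instances of the same class therefore appear under different names, which is what lets traces distinguish `travel_agent_1` from `travel_agent_2`.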

***

### Multi-Agent System Tracing

When working with multi-agent systems, use the `@judgment.agent()` decorator to track which agent is responsible for each tool call in your trace.

Only decorate the **entry point method** of each agent with `@judgment.agent()` and `@judgment.observe()`. Other methods within the same agent only need `@judgment.observe()`.

Here's a complete multi-agent system example with a flat folder structure:

```python title="main.py"
from planning_agent import PlanningAgent

if __name__ == "__main__":
    planning_agent = PlanningAgent("planner-1")
    goal = "Build a multi-agent system"
    # invoke_agent() is the entry point defined on PlanningAgent
    result = planning_agent.invoke_agent(goal)
    print(result)
```

```python title="utils.py"

from judgeval.tracer import Tracer

judgment = Tracer(project_name="multi-agent-system")

```

```python title="planning_agent.py"
from utils import judgment
from research_agent import ResearchAgent
from task_agent import TaskAgent


class PlanningAgent:
    def __init__(self, id):
        self.id = id

    @judgment.agent()  # Only add @judgment.agent() to the entry point function of the agent
    @judgment.observe()
    def invoke_agent(self, goal):
        print(f"Agent {self.id} is planning for goal: {goal}")

        research_agent = ResearchAgent("Researcher1")
        task_agent = TaskAgent("Tasker1")

        research_results = research_agent.invoke_agent(goal)
        task_result = task_agent.invoke_agent(research_results)

        return f"Results from planning and executing for goal '{goal}': {task_result}"

    @judgment.observe()  # No need to add @judgment.agent() here
    def random_tool(self):
        pass
```

```python title="research_agent.py"
from utils import judgment


class ResearchAgent:
    def __init__(self, id):
        self.id = id

    @judgment.agent()
    @judgment.observe()
    def invoke_agent(self, topic):
        return f"Research notes for topic: {topic}: Findings and insights include..."
```

```python title="task_agent.py"
from utils import judgment


class TaskAgent:
    def __init__(self, id):
        self.id = id

    @judgment.agent()
    @judgment.observe()
    def invoke_agent(self, task):
        result = f"Performed task: {task}, here are the results: Results include..."
        return result
```

The trace will show up in the Judgment platform, clearly indicating which agent called which method.

Each agent's tool calls are clearly associated with their respective classes, making it easy to follow the execution flow across your multi-agent system.

***

### `TraceScorerConfig(){:py}`

Initialize a `TraceScorerConfig` object for running an evaluation on the trace.

#### `scorer`

The scorer to run on the trace

#### `model`

Model name for evaluation

```py

"gpt-4.1"

```

#### `sampling_rate`

A float between 0 and 1 representing the chance the eval should be sampled

```py

0.75 # Eval occurs 75% of the time

```

#### `run_condition`

A function that returns a boolean indicating whether the eval should be run. When `TraceScorerConfig` is used in `@tracer.observe()`, `run_condition` is called with the decorated function's arguments

```py

lambda x: x > 10

```

For the above example, if this `TraceScorerConfig` instance is passed into a `@tracer.observe()` that decorates a function taking `x` as an argument, then the trace eval will only run if `x > 10` when the decorated function is called

```py title="trace_scorer_config.py"
judgment = Tracer(project_name="default_project")

# Retrieve a trace scorer to be used with the TraceScorerConfig
trace_scorer = TracePromptScorer.get(name="sample_trace_scorer")


# A trace eval is only triggered if process_request() is called with x > 10
@judgment.observe(span_type="function", scorer_config=TraceScorerConfig(
    scorer=trace_scorer,
    sampling_rate=1.0,
    run_condition=lambda x: x > 10
))
def process_request(x):
    return x + 1
```

In the above example, a trace eval will be run for the trace/sub-trace with the process\_request() function as the root.
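The interaction between `run_condition` and `sampling_rate` can be sketched with a toy decorator that records when an evaluation would have been triggered. This is an illustration only: `toy_observe_with_eval` and `triggered` are hypothetical names, the real decorator runs an actual trace evaluation, and the order in which the two checks are applied is an assumption.

```python
import functools
import random

triggered = []  # records the arguments for which an evaluation would run


def toy_observe_with_eval(run_condition=None, sampling_rate=1.0, rng=random):
    """Toy decorator: gate a would-be trace eval on run_condition and sampling_rate."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            result = func(*args, **kwargs)
            # run_condition receives the same arguments as the decorated function.
            condition_ok = run_condition is None or run_condition(*args, **kwargs)
            if condition_ok and rng.random() < sampling_rate:
                triggered.append((args, kwargs))
            return result
        return wrapper
    return decorator


@toy_observe_with_eval(run_condition=lambda x: x > 10, sampling_rate=1.0)
def process_request(x):
    return x + 1


process_request(5)   # condition is False, nothing recorded
process_request(42)  # condition is True, recorded
print(triggered)     # [((42,), {})]
```

Note that the function's return value is unaffected either way; the condition only decides whether an evaluation is scheduled.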

***

## Current Span Access

### `tracer.get_current_span(){:py}`

Returns the current span object for direct access to span properties and methods, useful for debugging and inspection.

### Available Span Properties

The current span object provides these properties for inspection and debugging:

| Property | Type | Description |
| --- | --- | --- |
| span\_id | str | Unique identifier for this span |
| trace\_id | str | ID of the parent trace |
| function | str | Name of the function being traced |
| span\_type | str | Type of span ("span", "tool", "llm", "evaluation", "chain") |
| inputs | dict | Input parameters for this span |
| output | Any | Output/result of the span execution |
| duration | float | Execution time in seconds |
| depth | int | Nesting depth in the trace hierarchy |
| parent\_span\_id | str \| None | ID of the parent span (if nested) |
| agent\_name | str \| None | Name of the agent executing this span |
| has\_evaluation | bool | Whether this span has evaluation runs |
| evaluation\_runs | List\[EvaluationRun] | List of evaluations run on this span |
| usage | TraceUsage \| None | Token usage and cost information |
| error | Dict\[str, Any] \| None | Error information if span failed |
| state\_before | dict \| None | Agent state before execution |
| state\_after | dict \| None | Agent state after execution |

### Example Usage

```python
@tracer.observe(span_type="tool")
def debug_tool(query):
    span = tracer.get_current_span()
    if span:
        # Access span properties for debugging
        print(f"🔧 Executing {span.function} (ID: {span.span_id})")
        print(f"📊 Depth: {span.depth}, Type: {span.span_type}")
        print(f"📥 Inputs: {span.inputs}")

        # Check parent relationship
        if span.parent_span_id:
            print(f"👆 Parent span: {span.parent_span_id}")

        # Monitor execution state
        if span.agent_name:
            print(f"🤖 Agent: {span.agent_name}")

    result = perform_search(query)

    # Check span after execution
    if span:
        print(f"📤 Output: {span.output}")
        print(f"⏱️ Duration: {span.duration}s")

        if span.has_evaluation:
            print(f"✅ Has {len(span.evaluation_runs)} evaluations")

        if span.error:
            print(f"❌ Error: {span.error}")

    return result
```
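The `span_id` and `parent_span_id` properties are enough to reconstruct the call hierarchy of a trace. The sketch below does this for a handful of hypothetical span records expressed as plain dictionaries with the documented property names; `render_tree` is an illustrative helper, not part of the SDK.

```python
from collections import defaultdict

# Hypothetical span records using the documented property names.
spans = [
    {"span_id": "a", "parent_span_id": None, "function": "run_agent", "span_type": "span"},
    {"span_id": "b", "parent_span_id": "a", "function": "search_web", "span_type": "tool"},
    {"span_id": "c", "parent_span_id": "a", "function": "chat_completion", "span_type": "llm"},
    {"span_id": "d", "parent_span_id": "b", "function": "fetch_page", "span_type": "tool"},
]


def render_tree(spans):
    """Return indented lines, one per span, ordered parent-first."""
    children = defaultdict(list)
    for span in spans:
        children[span["parent_span_id"]].append(span)

    lines = []

    def visit(parent_id, depth):
        # Depth-first walk: emit a span, then everything nested beneath it.
        for span in children[parent_id]:
            lines.append(f"{'  ' * depth}{span['function']} [{span['span_type']}]")
            visit(span["span_id"], depth + 1)

    visit(None, 0)
    return lines


print("\n".join(render_tree(spans)))
```

The indentation level produced here corresponds to what the `depth` property reports for each span.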

## Getting Started

```python
from judgeval.tracer import Tracer, wrap
from openai import OpenAI

# Initialize tracer
tracer = Tracer(
    api_key="your_api_key",
    project_name="default_project"
)


# Basic function tracing
@tracer.observe(span_type="agent")
def my_agent(query):
    tracer.update_metadata({"user_query": query})

    result = process_query(query)

    tracer.log("Processing completed", label="info")
    return result


# Auto-trace LLM calls
client = wrap(OpenAI())
response = client.chat.completions.create(...)  # Automatically traced
```

# v0.1 Release Notes (July 1, 2025)

URL: /changelog/v0.01


## New Features

#### Trace Management

* **Custom Trace Tagging**: Add and remove custom tags on individual traces to better organize and categorize your trace data (e.g., environment, feature, or workflow type)

## Fixes

#### Improved Markdown Display

Fixed layout issues where markdown content wasn't properly fitting container width, improving readability.

## Improvements

No improvements in this release.

## New Features

#### Enhanced Prompt Scorer Integration

* **Automatic Database Sync**: Prompt scorers automatically push to database when created or updated through the SDK. [Learn about PromptScorers →](https://docs.judgmentlabs.ai/documentation/evaluation/scorers/prompt-scorer)

* **Smart Initialization**: Initialize ClassifierScorer objects with automatic slug generation or fetch existing scorers from database using slugs

## Fixes

No bug fixes in this release.

## Improvements

#### Performance

* **Faster Evaluations**: All evaluations now route through optimized async worker servers for improved experiment speed

* **Industry-Standard Span Export**: Migrated to batch OpenTelemetry span exporter in C++ from custom Python implementation for better reliability, scalability, and throughput

* **Enhanced Network Resilience**: Added intelligent timeout handling for network requests, preventing blocking threads and potential starvation in production environments

* **Advanced Span Lifecycle Management**: Improved span object lifecycle management for better span ingestion event handling

#### Developer Experience

* **Updated Cursor Rules**: Enhanced Cursor integration rules to assist with building agents using Judgeval. [Set up Cursor rules →](https://docs.judgmentlabs.ai/documentation/developer-tools/cursor/cursor-rules#cursor-rules-file)

#### User Experience

* **Consistent Error Pages**: Standardized error and not-found page designs across the platform for a more polished user experience

## New Features

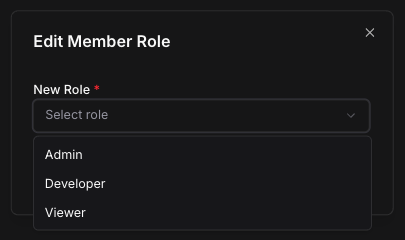

#### Role-Based Access Control

* **Multi-Tier Permissions**: Implement viewer, developer, admin, and owner roles to control user access within organizations

* **Granular Access Control**: Viewers get read-only access to non-sensitive data, and developers handle all non-administrative tasks; finer controls are coming soon

#### Customer Usage Analytics

* **Usage Monitoring Dashboard**: Track and monitor customer usage trends with visual graphs showing usage vs time and top customers by cost and token consumption

* **SDK Customer ID Assignment**: Set a customer ID with `tracer.set_customer_id()` to track usage per customer. [Track customer LLM usage →](https://docs.judgmentlabs.ai/documentation/tracing/metadata#metadata-options)

#### API Integrations

* **Enhanced Token Tracking**: Added support for input cache tokens across OpenAI, Gemini, and Anthropic APIs

* **Together API Support**: Extended `wrap()` functionality to include Together API clients. [Set up Together tracing →](https://docs.judgmentlabs.ai/documentation/tracing/introduction#tracing)

## Fixes

No bug fixes in this release.

## Improvements

#### Platform Reliability

* **Standardized Parameters**: Consistent naming conventions across evaluation and tracing methods

* **Improved Database Performance**: Optimized trace span ingestion for increased throughput and decreased latency

### Initial Release

* Initial platform launch!

# v0.2 Release Notes (July 23, 2025)

URL: /changelog/v0.02


## New Features

#### Multi-Agent System Support

* **Multi-Agent System Tracing**: Enhanced trace view with agent tags displaying agent names when provided for better multi-agent workflow visibility

#### Organization Management

* **Smart Creation Dialogs**: When creating new projects or organizations, the name field automatically fills with your current search term, speeding up the creation process

* **Enhanced Search**: Improved search functionality in project and organization dropdowns for more accurate filtering

* **Streamlined Organization Setup**: Added "create organization" and "view all workspaces" options directly to the dropdown menus

## Fixes

No bug fixes in this release.

## Improvements

#### User Experience

* **Keyboard Navigation**: Navigate through trace data using arrow keys when viewing trace details in the popout window

* **Visual Clarity**: Added row highlighting to clearly show which trace is currently open in the detailed view

* **Better Error Handling**: Clear error messages when project creation fails, with automatic navigation to newly created projects on success

#### Performance

* **Faster API Responses**: Enabled Gzip compression for API responses, reducing data transfer sizes and improving load times across the platform
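
To illustrate why Gzip helps with JSON-heavy responses, here is a small standard-library sketch. The payload shape is invented for illustration and is not Judgment's actual response format.

```python
import gzip
import json

# Hypothetical payload: a list of repetitive span-like records.
payload = json.dumps(
    [{"span": i, "name": "llm_call"} for i in range(1000)]
).encode("utf-8")

compressed = gzip.compress(payload)

# Repetitive JSON compresses well, so far fewer bytes cross the network.
print(f"raw: {len(payload)} bytes, gzipped: {len(compressed)} bytes")

# Decompression restores the original bytes exactly.
restored = gzip.decompress(compressed)
```

Most HTTP clients negotiate and decode Gzip transparently, so this change typically requires no client-side code.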

# v0.3 Release Notes (July 29, 2025)

URL: /changelog/v0.03


## New Features

#### Error Investigation Workflow

Click an error in the dashboard table to navigate directly to the erroneous trace for detailed debugging.

## Fixes

No bug fixes in this release.

## Improvements

No improvements in this release.

## New Features

No new features in this release.

## Fixes

#### Bug fixes and stability improvements

Various bug fixes and stability improvements.

## Improvements

No improvements in this release.

## New Features

#### Client Integrations

* **Groq Client Integration**: Added `wrap()` support for Groq clients with automatic token usage tracking and cost monitoring. [Set up Groq tracing →](https://docs.judgmentlabs.ai/documentation/tracing/introduction#tracing)

#### Enhanced Examples

* **Flexible Example Objects**: Examples now support custom fields, making it easy to define data objects that represent your scenario. [Define custom Examples →](https://docs.judgmentlabs.ai/documentation/evaluation/scorers/custom-scorers#define-your-custom-example-class)

## Fixes

No bug fixes in this release.

## Improvements

#### Performance

* **Faster JSON Processing**: Migrated to orjson for significantly improved performance when handling large datasets and trace data

#### User Experience

* **Smart Navigation**: Automatically redirects you to your most recently used project and organization when logging in or accessing the platform

# v0.4 Release Notes (Aug 1, 2025)

URL: /changelog/v0.04


## New Features

#### Enhanced Rules Engine

* **PromptScorer Rules**: Use your PromptScorers as metrics in automated rules, enabling rule-based actions triggered by your custom scoring logic. [Configure rules with PromptScorers →](https://docs.judgmentlabs.ai/documentation/performance/alerts/rules#rule-configuration)

#### Access Control Enhancement

* **New Viewer Role**: Added a read-only role that can view dashboards, traces, evaluation results, datasets, alerts, and other platform data without modification privileges. It suits stakeholders who need visibility without editing access

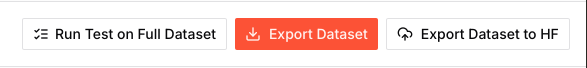

#### Data Exporting

* **Trace Export**: Export selected traces from monitoring and dataset tables as JSONL files for external analysis or archival purposes. [Export traces →](https://docs.judgmentlabs.ai/documentation/evaluation/dataset#export-from-platform-ui)
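
An exported JSONL file holds one JSON object per line, so it can be read with the standard library alone. The sketch below assumes nothing about the fields inside each trace record; the helper name `read_jsonl` and the file name in the usage note are hypothetical.

```python
import json


def read_jsonl(path):
    """Yield one parsed JSON object per non-empty line of a JSONL file."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # tolerate blank lines between records
                yield json.loads(line)
```

For example, `traces = list(read_jsonl("exported_traces.jsonl"))` loads every exported trace into memory; iterate over the generator directly for large exports.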

## Fixes

No bug fixes in this release.

## Improvements

#### Data Management

* **Paginated Trace Fetching**: Implemented efficient pagination for viewing large volumes of traces, making it faster to browse and analyze your monitoring data

* **Multi-Select and Batch Operations**: Select multiple tests and delete them in bulk for more efficient test management

#### Evaluation Expected Behavior

* **Consistent Error Scoring**: Custom scorers that encounter errors now automatically receive a score of 0, ensuring clear identification of failed evaluations in your data
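
The behavior can be pictured with a small wrapper. This is only a sketch of the idea, not the platform's implementation; `score_or_zero` and the scorer callables are hypothetical names.

```python
def score_or_zero(scorer, example):
    """Run a scorer callable and report 0 if it raises."""
    try:
        return scorer(example)
    except Exception:
        # A failing scorer is recorded as 0 so the failure is visible in results.
        return 0


def broken_scorer(example):
    # Hypothetical scorer that fails when the expected field is missing.
    return len(example["output"]) / len(example["expected"])


print(score_or_zero(broken_scorer, {"output": "hi"}))  # missing "expected" key
```

Because a legitimate score can also be 0, it is worth checking the scorer's error details when a 0 looks unexpected.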

#### Developer Experience

* **Enhanced Logging**: Added detailed logging for PromptScorer database operations to help debug and monitor scorer creation and updates

#### User Experience

* **Enhanced Action Buttons**: Improved selection action bars across all tables with clearer button styling, consistent labeling, and better visual hierarchy for actions like delete and export

* **Streamlined API Key Setup**: Copy API keys and organization IDs as pre-formatted environment variables (`JUDGMENT_API_KEY` and `JUDGMENT_ORG_ID`) for faster application configuration
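
Once the two variables are set in your environment, an application can read them at startup. A minimal sketch, assuming only the variable names above; the helper `load_judgment_credentials` is hypothetical and not part of the Judgeval SDK.

```python
import os


def load_judgment_credentials(env=None):
    """Return (api_key, org_id) from the environment, failing fast if either is missing."""
    env = os.environ if env is None else env
    required = ("JUDGMENT_API_KEY", "JUDGMENT_ORG_ID")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return env["JUDGMENT_API_KEY"], env["JUDGMENT_ORG_ID"]
```

Failing fast here surfaces a misconfigured deployment at startup rather than on the first traced call.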

# v0.5 Release Notes (Aug 4, 2025)

URL: /changelog/v0.05


## New Features

#### Annotation Queue System

* **Automated Queue Management**: Failed traces are automatically added to an annotation queue for manual review and scoring